
One of the major challenges of SEO is dealing with search algorithm updates. The minute it feels like you have fine-tuned your website, Google will tweak its ranking algorithm and throw your website off balance. If your site is hit, it could take months to figure out why your rankings dropped and to come up with a fix, all while losing traffic and conversions.

Fortunately, there is a way to lower your risk of being hurt by future algorithm updates. Since the algorithm is not yet perfect, Google hires quality raters to help steer it in the right direction. Quality raters are regular internet users who are asked to evaluate the quality of search results and judge whether the algorithm is doing a good job. They take part in small-scale experiments before updates are rolled out to the general public.

The raters are given a document called the Quality Rater Guidelines (QRG), a collection of criteria for evaluating content quality. It is essentially a list of ranking signals that Google wants to include in its algorithm at some point but hasn't yet learned how to measure, so, for now, these signals have to be evaluated manually.

Undoubtedly, it is only a matter of time before Google learns to measure these signals algorithmically and they become official ranking factors. So, why wait? You can future-proof your content by studying the QRG, identifying upcoming algorithm updates, and adjusting your SEO strategy accordingly. And, just in case you don't feel like reading a 170-page document, I've put together a list of search optimization tips inspired by the Quality Rater Guidelines.

One of the first steps in evaluating page quality is assessing the reputation of the entire website the page belongs to. To do this, raters are instructed to look for reviews, news articles, business directory listings, Wikipedia articles, and other independent sources of information, and see what they say about the website and/or company. In fact, Google does measure some of these reputation signals algorithmically, and there are ways to stack them in your favor.

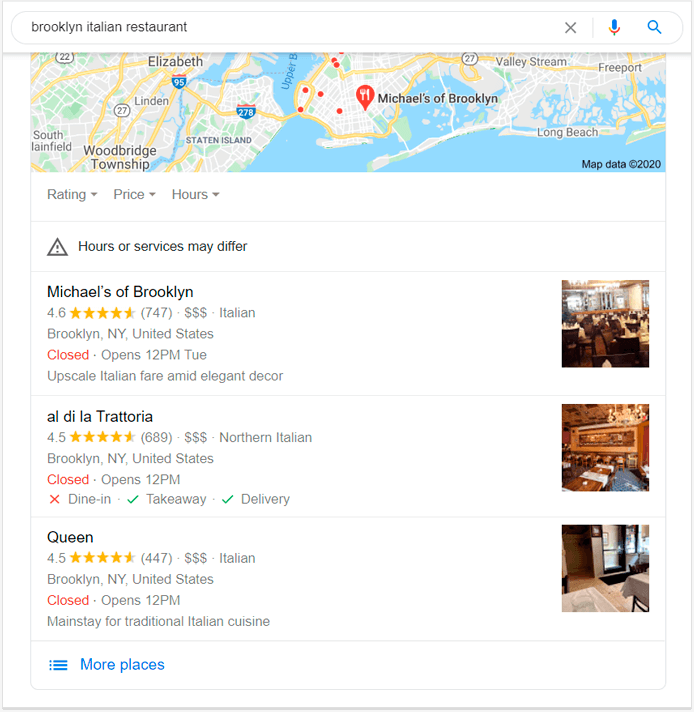

Google representatives have said in the past that, for a variety of reasons, the algorithm cannot use reviews from third-party business directories like the Better Business Bureau. At the same time, Google definitely uses reviews from its own directory, Google My Business (GMB), to rank local search results, and, though this is strictly speculation, there is no reason to rule out that GMB reviews also factor into general rankings.

With this in mind, it is worth encouraging your customers to leave GMB reviews and keeping your rating high by addressing negative reviews. Reviewers can edit their GMB reviews, so try to resolve unhappy customers' concerns and see whether they will update their rating. Even if you don't succeed, other users reading your reviews will see your customer care effort and come away with a more positive impression of your company.

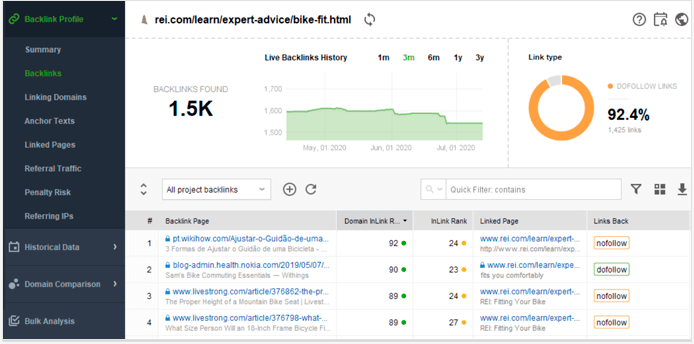

Google's PageRank is one of the oldest technologies used to rank search results. It estimates a page's importance from the number and quality of the links pointing to it. This is similar to how raters evaluate website reputation, except that PageRank does not read what other websites say about you; all it cares about are links. That makes your job easier: place your links on reputable websites, cut ties with low-authority websites, and maintain a healthy backlink profile.
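To make "number and quality of links" concrete, here is a sketch of the simplified PageRank formula described in the original PageRank paper, computed by power iteration. It illustrates the idea only and is not Google's production ranking, which combines many more signals and is not public. The damping factor of 0.85 is the value commonly cited from that paper; the function name and the four-site link graph are hypothetical.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank via power iteration.

    links maps each page to the list of pages it links to.
    Returns a dict of page -> score; the scores sum to 1.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    ranks = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page receives the baseline "random jump" share.
        new_ranks = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its current score evenly among the pages it links to.
                share = damping * ranks[page] / len(targets)
                for target in targets:
                    new_ranks[target] += share
            else:
                # Dangling page (no outgoing links): spread its score over all pages.
                share = damping * ranks[page] / n
                for target in pages:
                    new_ranks[target] += share
        ranks = new_ranks
    return ranks


# Hypothetical link graph: who links to whom.
web = {
    "reputable-news.com": ["your-site.com", "industry-blog.com"],
    "industry-blog.com": ["your-site.com"],
    "your-site.com": ["reputable-news.com"],
    "spammy-directory.com": ["your-site.com"],
}
for page, score in sorted(pagerank(web).items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph, your-site.com ends up with the highest score because three pages link to it, while spammy-directory.com, which nobody links to, keeps only the baseline share.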

With reliable backlink software like SEO SpyGlass, you can do just that. It replicates the PageRank algorithm, calculates your website's reputation score, and tells you whether you need to make adjustments. You can also use SEO SpyGlass to list your backlinks, identify the ones that are hurting your score, and spy on your competitors to find new backlink opportunities.

The QRG document instructs raters to explore the website for signs of trustworthiness. These signs differ between types of websites, but the bar is higher for e-commerce and Your Money or Your Life (YMYL) websites, since life-altering decisions require a higher level of trust. Here are some indicators of trustworthiness as stated in the Quality Rater Guidelines:

Generally, websites are considered more trustworthy when they have transparent policies and provide all the appropriate customer service pages: About, Contact Us, support, FAQs, terms and conditions, delivery and return policies, editorial policies, and so forth.

Users are more inclined to trust a website when they see other users engaging through meaningful comments and reviews. As a webmaster, encourage user engagement by actively asking for customer feedback, sending follow-up emails, and perhaps even rewarding active users through a loyalty program.

A secure connection should go without saying, but I still come across quite a few websites served over HTTP that really should be on HTTPS. If you handle any kind of personal information, and especially if you process payments, make sure your website provides a secure connection.

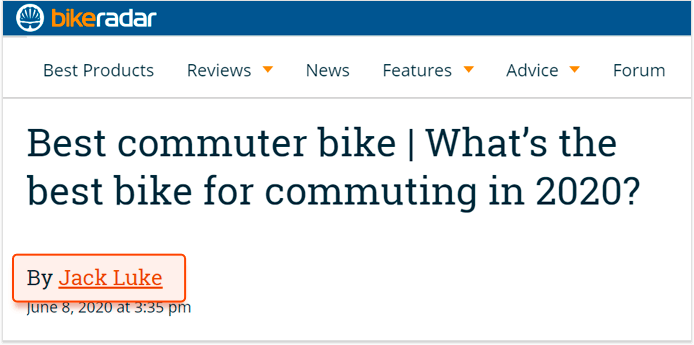

When the author of the content can be established, the raters are instructed to investigate their background. To do this, raters will google the author's name and look through their public profiles as well as evaluate their content on other websites. It is not always required, but most types of content would benefit from authors who are recognized as experts in their fields and have created many other pieces of content on the same topic.

In case author expertise ever becomes an official ranking factor, you can prepare for it by tagging your authors with Schema markup and by creating author profiles on your website. As an extra step, ask your authors to build out their public profiles (LinkedIn, Facebook, Twitter, etc.) to strengthen their overall presence on the web.
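Here is a minimal sketch of what author markup might look like: a small Python helper that emits schema.org `Article` JSON-LD with a `Person` as the `author`. The `Article`, `Person`, `author`, `url`, `jobTitle`, and `sameAs` vocabulary comes from schema.org; the helper name, the author, and all URLs are hypothetical placeholders, and which of these properties Google actually reads is an assumption worth checking against its structured data documentation.

```python
import json


def author_markup(headline, author_name, author_url, job_title, profiles):
    """Return a <script> tag containing schema.org Article JSON-LD with a Person author."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {
            "@type": "Person",
            "name": author_name,
            "url": author_url,      # the author's profile page on your own site
            "jobTitle": job_title,
            "sameAs": profiles,     # the author's public profiles elsewhere on the web
        },
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


# Hypothetical article and author, for illustration only.
print(author_markup(
    headline="How to Choose a Road Bike",
    author_name="Jane Doe",
    author_url="https://example.com/authors/jane-doe",
    job_title="Technical Editor",
    profiles=[
        "https://www.linkedin.com/in/jane-doe-example",
        "https://twitter.com/janedoe_example",
    ],
))
```

The resulting tag goes in the page's HTML; linking `url` to an on-site author profile and `sameAs` to public profiles mirrors the two steps described above.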

I recently spotted a good example of this type of optimization on BikeRadar, where each article has a clickable author name.

The name leads to an author profile, complete with a photo, a job title, a social link, a brief bio, and a long list of other articles written by the same person.

According to the QRG document, the amount of content on a page should be satisfactory. What counts as satisfactory will, of course, depend on the type of page and its purpose.

For a cheap product, a satisfactory amount of content would be one or two pictures, a brief description, and a price. For a premium product, a satisfactory amount of content would be a dozen photos, a tech spec, a detailed description, and maybe even a few links to additional sources, like manufacturer websites and third-party reviews.

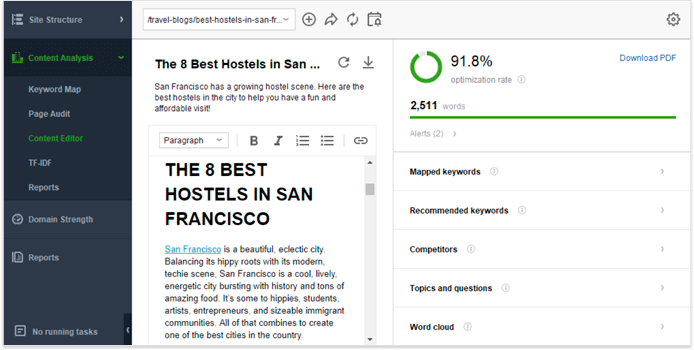

If you don't want to play the guessing game, you can use WebSite Auditor to benchmark your content against your top-ranking competitors. Go to its Content Editor module, add your main keyword, and get a reliable writing brief complete with a recommended word count, additional keywords, questions and topics, and a few more content creation features.

The QRG document states that creating good content should take a significant amount of time, effort, expertise, and talent, and it instructs raters to evaluate copy based on these quality indicators:

Even though Google denies using grammar as a ranking factor, its QRG document repeatedly asks raters to pay attention to spelling and punctuation errors, as well as word choice, sentence structure, and evidence of an editorial process. While it may not be a ranking factor yet, Google clearly values clear writing, and it wouldn't hurt to proofread your content or use proofreading software (I use the Grammarly Chrome extension for my articles).

The highest quality content should be unique in a way that cannot be replicated by other content creators. It could mean obtaining your own data, having exclusive access, sharing a one-of-a-kind experience, manufacturing unique products, or providing unique services.

If your content is not unique, it should at least be original: a fresh take on a popular topic, more comprehensive coverage, or anything else that adds significant value compared to similar pieces of content.

For many topics, but especially for YMYL topics, it is important that the content is factually accurate. This means your content should not promote conspiracy theories or make unsubstantiated claims, and you should always strive to include links to credible sources.

While the QRG document acknowledges the necessity of ads, it establishes quite a few limitations on what is acceptable. Here are the types of ads that raters are instructed to penalize:

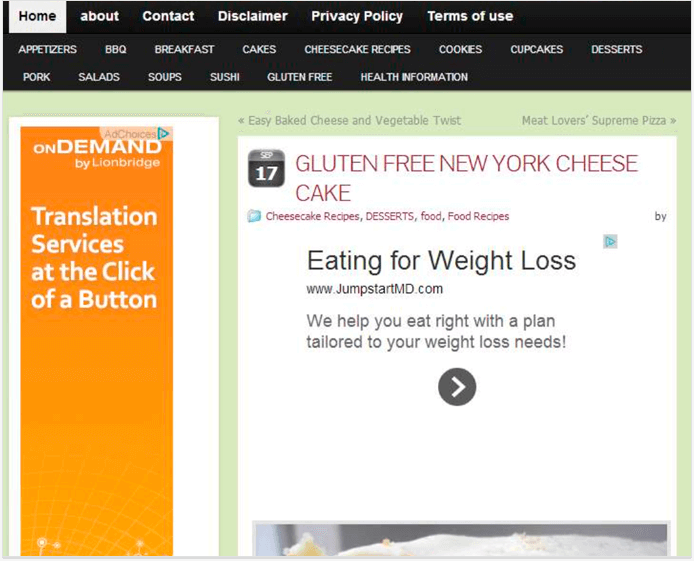

Pop-ups that are difficult to close, ads that interrupt the main content, and oversized ads are all considered bad user experience and are given a low quality rating. Here is an example of one such ad placed right below the title and pushing the main content below the fold:

Ads that are similar to the main content or the navigational elements may mislead users and are also considered bad user experience, bordering on malicious. Below is an example of a Quora ad, which looks exactly like a regular Quora post except for a barely noticeable promo tag:

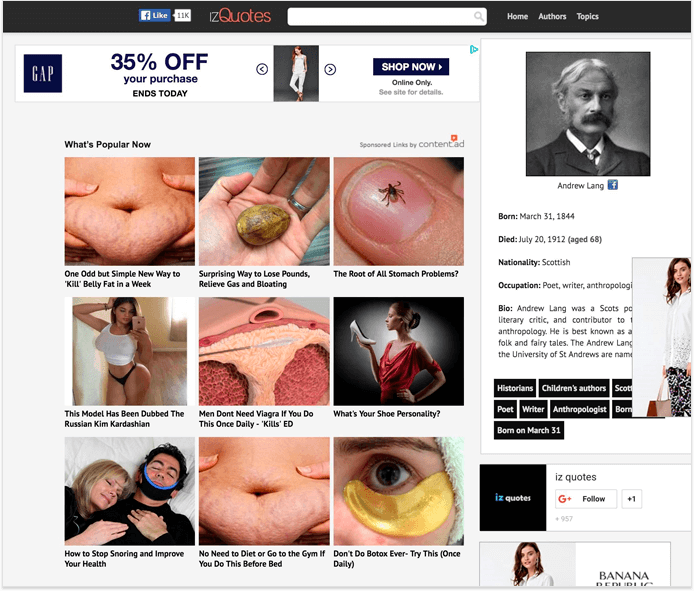

Finally, ads with shocking titles and graphic images are also a cause for a low quality rating and should definitely be avoided. Below is an example of such ads provided by the QRG document:

According to the QRG document, one of the biggest red flags for quality is a website that shows signs of abandonment. Here are the three areas where these signs may be most obvious:

The lowest quality rating is given to websites that show signs of technical deterioration, especially when it gets in the way of the website's purpose, e.g. if the checkout button doesn't work on an e-commerce website or the images do not load on a stock photo website.

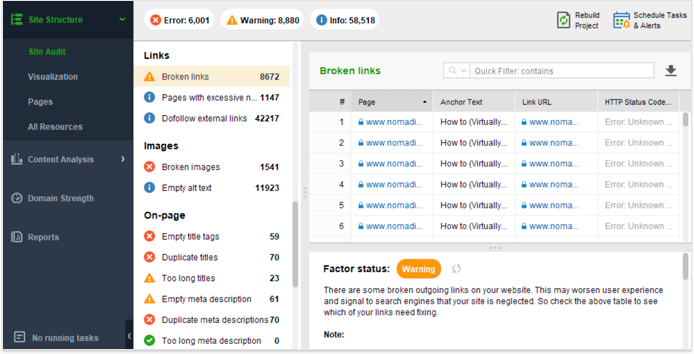

To this end, I recommend using WebSite Auditor to track all of your technical issues in one place. The tool audits your website for broken links, broken images, 4xx resources, orphan pages, and faulty redirects, among dozens of other potential issues.
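For a sense of what the broken-link part of such an audit does, here is a single-page sketch using only the Python standard library: it collects `<a href>` and `<img src>` URLs, requests each with `HEAD`, and flags anything that returns a 4xx/5xx status or cannot be reached. This is not how WebSite Auditor works internally; a real crawler also follows internal links across the whole site, respects robots.txt, throttles its requests, and retries with `GET` when a server rejects `HEAD`. The status lookup is a parameter so the demo runs offline with made-up statuses; the page, URLs, and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collects <a href> and <img src> URLs from one HTML page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.urls = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "a":
            url = attributes.get("href")
        elif tag == "img":
            url = attributes.get("src")
        else:
            url = None
        if url:
            # Resolve relative URLs such as "/pricing" against the page address.
            self.urls.append(urljoin(self.base_url, url))


def fetch_status(url, timeout=10):
    """Return the HTTP status of url via a HEAD request, or None if unreachable."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as response:
            return response.status
    except HTTPError as err:  # 4xx and 5xx responses are raised as HTTPError
        return err.code
    except OSError:  # DNS failures, refused connections, timeouts
        return None


def find_broken(html, base_url, get_status=fetch_status):
    """Return (url, status) pairs for http(s) links and images that fail to load."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    collector.close()
    broken = []
    for url in dict.fromkeys(collector.urls):  # de-duplicate while keeping page order
        if not url.startswith(("http://", "https://")):
            continue  # skip mailto:, tel:, javascript: and similar links
        status = get_status(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken


# Offline demo: a dict lookup stands in for real HTTP requests.
page = (
    '<a href="/pricing">Pricing</a> <img src="/img/hero.png"> '
    '<a href="https://example.com/old-post">Old post</a>'
)
fake_statuses = {
    "https://example.com/pricing": 200,
    "https://example.com/img/hero.png": 404,
    "https://example.com/old-post": 410,
}
print(find_broken(page, "https://example.com/", get_status=fake_statuses.get))
# [('https://example.com/img/hero.png', 404), ('https://example.com/old-post', 410)]
```

Calling `find_broken(html, url)` without `get_status` uses `fetch_status` and makes real network requests.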

For many queries, users prefer the latest information, so keep your content fresh. Create a schedule for updating your content: update the dates, include fresh images, check for recent developments in the field, review competitors' content for update ideas, and make sure your content follows best writing practices.
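One way to turn this into a schedule is to keep an inventory of pages with their last-updated dates and flag anything older than a review interval. Below is a minimal Python sketch, assuming you can export such an inventory from your CMS; the 180-day interval, the function name, and the URLs are hypothetical, and the right interval depends on how quickly your topic changes.

```python
from datetime import date, timedelta


def pages_due_for_update(last_updated, today, max_age_days=180):
    """Return URLs last updated more than max_age_days before today, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [(updated, url) for url, updated in last_updated.items() if updated < cutoff]
    return [url for updated, url in sorted(stale)]


# Hypothetical inventory: URL -> date the page was last updated.
inventory = {
    "/guides/seo-basics": date(2023, 1, 10),
    "/guides/link-building": date(2024, 5, 2),
    "/blog/algorithm-updates": date(2022, 11, 28),
}
print(pages_due_for_update(inventory, today=date(2024, 6, 1)))
# ['/blog/algorithm-updates', '/guides/seo-basics']
```

Sorting oldest first gives the most neglected pages priority in the next update cycle.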

A website might be knocked a few quality points for an unkempt comment section. Make sure to curate your comments and remove those that look like spam: auto-generated comments, comments with links, unhelpful comments, and malicious comments that may spread sensitive information or offend other users.

It is also a part of the raters' job to look at search results for a particular query and rate them for relevance. So, a large chunk of the QRG describes what Google considers to be a good search result snippet:

A page title should summarize the content and set a realistic expectation of what is to be found on the page. Titles that are overly promotional, exaggerated, or shocking are considered to create unrealistic expectations and would normally be given the lowest rating. So a good practice is to write titles that include your main keywords but do not oversell your content.

It should be obvious from your snippet that the page will meet the needs of a particular query. Just by looking at your search result, the user should be able to understand whether the page is going to be a guide, a listicle, a product page, or any other type of page. To this end, make sure to reflect the type of the page in the snippet by using the title, meta description, and Schema markup, if applicable.

Another way you can appear in search results is through a Google My Business knowledge panel. Raters are instructed to give lower quality ratings to GMB panels that lack key information. Missing business hours and contact details are a big no-no for most GMB profiles, but, as a rule of thumb, the more information you fill out, the higher the quality of your knowledge panel. Make sure to claim, fill out, and maintain your GMB profile, since it is a cornerstone of your local search optimization.

Just a quick reminder that most of the SEO tips listed above are not currently ranking factors; they are still evaluated manually rather than algorithmically. But, knowing Google, I doubt it will be very long before it learns to evaluate search results the way humans do. That is why the Quality Rater Guidelines are a great source of ideas for what future ranking factors may look like and what we can do to prepare for them today.