
12-minute read

There's always been a good deal of confusion in the field of search optimization. Most of what we know comes from trial and error, and by the time we have figured something out, the algorithm has already moved on. So, predictably, a fair share of SEO advice is speculative, contradictory, and hopelessly outdated.

Today, let's look at some of the tactics that still persist despite being proven useless or even outlawed by Google policies. Some of them are no more than a waste of time, while others may actually land you a penalty or lead to sudden ranking drops.

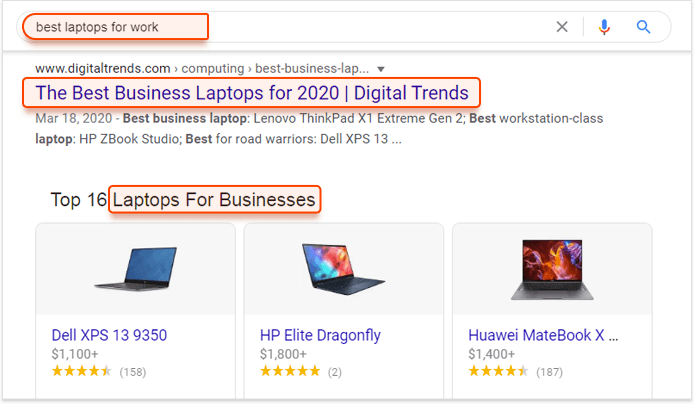

Back when the algorithm wasn't so advanced, even the slightest change of a search query would produce a totally different collection of search results. So, if you wanted to gain search visibility in any topic, you'd have to create a dedicated page for each keyword variation within this topic. Say, if you were selling business laptops, you'd have to create different pages for business laptops, professional laptops, office laptops, and laptops for work.

Since the introduction of the Hummingbird update in 2013, Google has gotten pretty good at identifying not only synonyms but also searches with similar intent. Today, whether you search for business laptops, professional laptops, or laptops for work, you get a nearly identical set of pages that match your intent rather than your choice of words.

As you can imagine, creating dedicated keyword pages is now obsolete. Why spread yourself thin trying to optimize a dozen pages for as many queries when all of that SEO effort could go toward creating one highly competitive page? Not to mention that having dozens of nearly identical pages may be viewed by Google as spammy and result in a search penalty.

Develop an SEO content plan that sticks to the "one page per topic, not per keyword" rule. Address the topic of the page by using your target keyword, as well as its varieties, synonyms, and related search terms.
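
This rule follows from the observation that Google now returns nearly identical results for queries with the same intent. One way to apply it is to compare the top results for each keyword variation and group the keywords whose result lists overlap heavily. Each group then gets one page. The Python sketch below works under our own assumptions: the result lists are made up, and the Jaccard overlap measure and the 0.5 threshold are illustrative choices, not anything Google publishes.

```python
from itertools import combinations


def serp_overlap(a: list[str], b: list[str]) -> float:
    """Jaccard similarity of two top-N result lists (treated as sets of URLs)."""
    sa, sb = set(a), set(b)
    if not (sa | sb):
        return 0.0
    return len(sa & sb) / len(sa | sb)


def group_keywords(serps: dict[str, list[str]], threshold: float = 0.5) -> list[set[str]]:
    """Put keywords in the same group when their results overlap >= threshold.

    Uses union-find, so grouping is transitive: if A~B and B~C, all three share a page.
    """
    parent = {kw: kw for kw in serps}

    def find(kw: str) -> str:
        while parent[kw] != kw:
            parent[kw] = parent[parent[kw]]  # path halving keeps lookups short
            kw = parent[kw]
        return kw

    for a, b in combinations(serps, 2):
        if serp_overlap(serps[a], serps[b]) >= threshold:
            parent[find(a)] = find(b)

    groups: dict[str, set[str]] = {}
    for kw in serps:
        groups.setdefault(find(kw), set()).add(kw)
    return list(groups.values())


# Hypothetical top-4 results per query (domains stand in for full URLs).
serps = {
    "business laptops": ["a.com", "b.com", "c.com", "d.com"],
    "professional laptops": ["a.com", "b.com", "c.com", "e.com"],
    "laptops for work": ["b.com", "a.com", "d.com", "c.com"],
    "gaming laptops": ["x.com", "y.com", "z.com", "a.com"],
}

for group in group_keywords(serps):
    print(sorted(group))
```

With this sample data, the three business-laptop variations land in one group and "gaming laptops" stays on its own, which is the one-page-per-topic split. In practice, the result lists would come from a rank tracker or a SERP export.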

The idea behind keyword density is that, for Google to understand what a page is about, a certain percentage of the copy should consist of keywords. At its worst, this advice held that as much as five percent of the text should be an exact match of your target keywords, which is roughly once per sentence.
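
To make the arithmetic concrete, density is commonly computed as keyword occurrences divided by total words, times 100. Some tools also multiply by the number of words in the phrase. The helper below uses the simpler definition. It is a sketch for illustration, and the function names are ours. Under this definition, 5 percent for a one-word keyword means one mention every 20 words, so in 20-word sentences it appears once per sentence.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; punctuation is dropped."""
    return re.findall(r"[a-z0-9']+", text.lower())


def keyword_density(text: str, keyword: str) -> float:
    """Exact-match occurrences of `keyword` per 100 words of `text`."""
    words, target = tokenize(text), tokenize(keyword)
    if not words or not target:
        return 0.0
    n = len(target)
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return 100.0 * hits / len(words)


sample = ("Business laptops need long battery life. Our business laptops "
          "are light, fast, and built for the office.")
print(f"{keyword_density(sample, 'business laptops'):.1f}%")
```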

While keywords are still somewhat important, both exact-match keywords and keyword densities are a thing of the past. As far back as 2011, Matt Cutts warned that there is no added value in using keywords more than a few times per page and that overusing them may hurt rankings. Additionally, chasing keyword density percentages can damage the flow of your copy, arousing the suspicion of both users and search engines and possibly earning you a spam penalty.

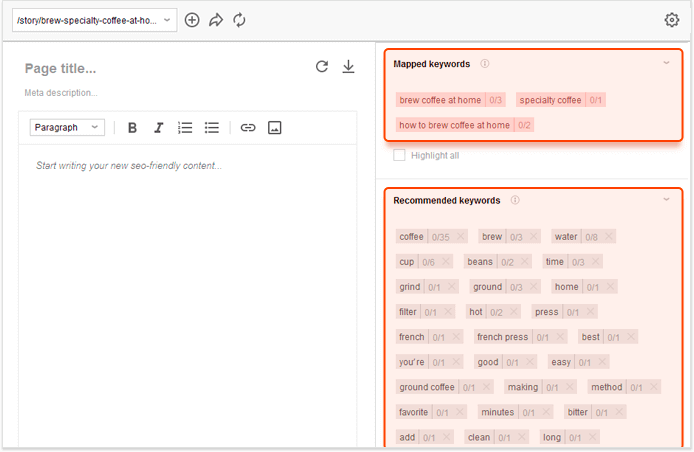

Play it safe and use a dedicated SEO Content Editor to figure out the optimal use of keywords for each topic. Content Editor analyzes the top 20 search results for your query and supplies writing guidelines based on what's already working for other websites, covering both target keywords and suggested keywords. The editor will also warn you about penalty risk if you go overboard on keyword use.
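
How Content Editor works internally isn't described here, so what follows is only a generic sketch of the idea: count how often each keyword appears on the top-ranking pages, treat the observed range as a guideline, and flag a draft that uses a keyword more often than any competitor does. The function names, the exact-match counting, and the "more than the maximum" rule are our assumptions.

```python
import re
from statistics import median


def count_phrase(text: str, phrase: str) -> int:
    """Number of exact-match occurrences of `phrase` in `text` (case-insensitive)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    n = len(target)
    if n == 0:
        return 0
    return sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)


def usage_ranges(pages: list[str], keywords: list[str]) -> dict[str, tuple[int, int, float]]:
    """Per keyword: (min, max, median) uses across the top-ranking pages.

    `pages` must be non-empty (min/max of an empty list raises ValueError).
    """
    ranges = {}
    for kw in keywords:
        counts = [count_phrase(page, kw) for page in pages]
        ranges[kw] = (min(counts), max(counts), median(counts))
    return ranges


def overused(draft: str, ranges: dict[str, tuple[int, int, float]]) -> list[str]:
    """Keywords the draft uses more often than any competitor page does."""
    return [kw for kw, (_, most, _) in ranges.items() if count_phrase(draft, kw) > most]
```

You would pass in the plain text of the top-ranking pages (fetched however you like) along with your target and suggested keywords. The median is a reasonable target, and anything above the maximum is worth a second look.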

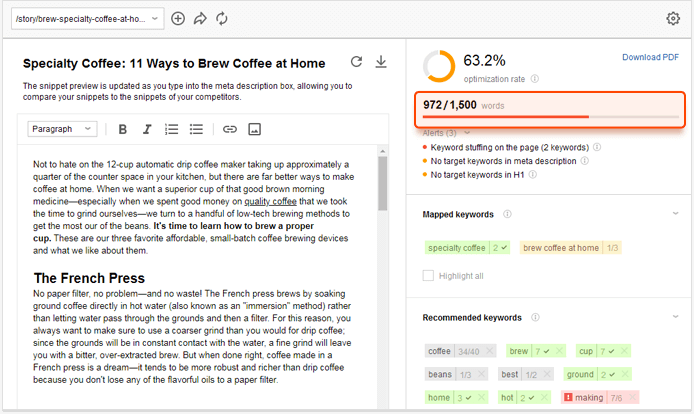

There is a persistent idea within the SEO community that a page has to reach a certain minimum word count to achieve top rankings. A couple of years ago it was 1,000 words, then it went up to 2,000 words, and I believe we've settled on 1,500 words, for now. Some studies have also shown that long-form content tends to earn up to 77% more backlinks than short-form content.

While there is some convincing data on content length and rankings, as usual, correlation does not imply causation. What really matters to both users and search engines is that the content is thorough. For some topics, it could mean 500 words, while for other topics it could mean 10,000 words. Trying to cover either of those topics in precisely 1,500 words would result in poor quality content and correspondingly poor rankings.

Determine your word count on a case-by-case basis. Better yet, stop counting words and keep writing until your topic is exhausted. Again, you can use a dedicated SEO Content Editor to analyze your competitors' pages and learn what kind of content length is considered exhaustive for each particular topic.
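
The same competitor-based approach works for length. As a rough sketch, you could take the word counts of the top-ranking pages and aim for the middle of their distribution rather than a fixed number. This is our assumption, not a documented Content Editor formula. The sketch uses quartiles from Python's `statistics` module, and the function names are hypothetical.

```python
import re
from statistics import quantiles


def word_count(text: str) -> int:
    """Rough count of word-like tokens (letters, digits, underscores)."""
    return len(re.findall(r"\w+", text))


def target_length(competitor_texts: list[str]) -> tuple[int, int, int]:
    """Return (lower quartile, median, upper quartile) of competitor word counts."""
    counts = sorted(word_count(t) for t in competitor_texts)
    if len(counts) < 2:
        raise ValueError("need at least two competitor pages")
    q1, q2, q3 = quantiles(counts, n=4)  # default 'exclusive' method
    return round(q1), round(q2), round(q3)
```

For five competitor pages of 500, 800, 1,200, 1,500, and 3,000 words, this returns `(650, 1200, 2250)`. That suggests a middle range of roughly 650 to 2,250 words for that topic, not the "precisely 1,500" the rule of thumb would give.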

It used to be that the more backlinks you had, the higher you ranked in search. The only limiting factor was finding enough websites willing to host links to your site. That's where Web 2.0 came in very handy. The rise of user-generated content gave SEOs countless new opportunities for link placement, and link spam quickly reached pandemic levels.

Back in 2005, Google made it clear that it would penalize websites hosting a lot of user-generated spam and encouraged webmasters to take measures against spammy link building. One such measure was the introduction of the nofollow attribute. When added to a link, the nofollow attribute stops the link from passing any authority to its destination page, making the link useless for SEO. Since then, the nofollow attribute has been implemented on most Web 2.0 websites and applications, including WordPress, Medium, Quora, YouTube, Flickr, Disqus, and all popular social networks.
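
In markup, the attribute is simply a `rel="nofollow"` value on the link, as in `<a href="..." rel="nofollow">`. The sketch below uses Python's standard-library `html.parser` to list the links on a page and mark which ones carry that value. It checks only for the nofollow token discussed here. The class name and sample markup are ours.

```python
from html.parser import HTMLParser


class LinkAuditor(HTMLParser):
    """Collect (href, followed) pairs for every <a href="..."> on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, bool]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # rel can hold several space-separated tokens, e.g. rel="noopener nofollow"
        rel_tokens = (attributes.get("rel") or "").lower().split()
        self.links.append((href, "nofollow" not in rel_tokens))


page = """
<p>Read <a href="https://example.com/guide">our guide</a> or
<a href="https://example.com/spam" rel="nofollow">this comment link</a>.</p>
"""
auditor = LinkAuditor()
auditor.feed(page)
for href, followed in auditor.links:
    print(href, "followed" if followed else "nofollow")
```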

While Web 2.0 backlinks are useless for SEO, they still make sense for traffic and content promotion. Having a secondary blog, a presence on a discussion board, or an active social profile are all valid strategies for distributing your content and directing traffic back to your website.

On top of that, some Web 2.0 websites are becoming search engines in their own right. Today, people search YouTube, Quora, Reddit, or Instagram the same way they would search Google. Being present on those platforms is also a type of search optimization.

Another way to generate backlinks at scale is to build a private blog network (PBN): a network of your own websites that link back to your primary website. The allure of this technique is that you are in control of the network. You don't have to negotiate with other website owners, and you'll never fall victim to the nofollow attribute. Done right, a PBN is one of the quickest ways to boost your rankings.

Google is not a huge fan of PBNs and, if discovered, will not hesitate to slap the whole network with a penalty. Of course, some PBNs are successful at avoiding detection — they hire writers to create high-quality content, spread their IPs around the world, and diversify their backlink profiles. That being said, those PBNs are also quite costly to maintain and most businesses will struggle to break even on this strategy, while still running a risk of eventual penalty and loss of investment.

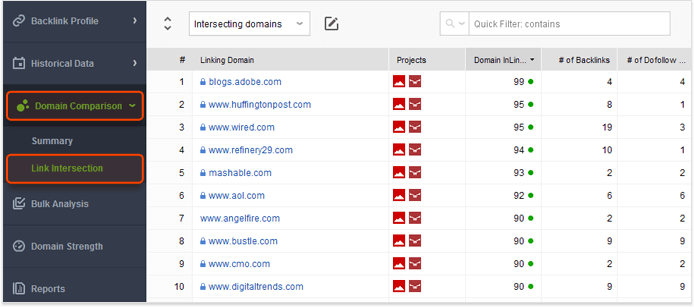

Use SEO SpyGlass to find legitimate link placement opportunities. Go to Domain Comparison > Link Intersection, add two or more of your competitors, and find the websites that link to more than one of them. Apply filters to exclude nofollow links and low-authority websites, and you may well end up with a list of a few hundred prospects. Time to start your outreach.
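
SEO SpyGlass does this in its interface, but the underlying idea is plain set logic. The sketch below assumes you have exported each competitor's backlinks into simple records. The `Backlink` fields, the 0 to 100 authority scale, and the default threshold of 30 are hypothetical choices of ours, not SpyGlass's export format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backlink:
    source_domain: str
    nofollow: bool
    authority: int  # hypothetical 0-100 score from whatever backlink tool you use


def link_intersection(profiles: dict[str, list[Backlink]],
                      min_competitors: int = 2,
                      min_authority: int = 30) -> list[str]:
    """Domains with a followed, sufficiently authoritative link to several competitors."""
    linked_to: dict[str, set[str]] = {}
    for competitor, links in profiles.items():
        for link in links:
            # Same filters as in the text: drop nofollow and low-authority sources.
            if link.nofollow or link.authority < min_authority:
                continue
            linked_to.setdefault(link.source_domain, set()).add(competitor)
    return sorted(d for d, comps in linked_to.items() if len(comps) >= min_competitors)


profiles = {  # made-up example data
    "rival-a.com": [Backlink("techblog.example", False, 60),
                    Backlink("spam.example", False, 5),
                    Backlink("forum.example", True, 70)],
    "rival-b.com": [Backlink("techblog.example", False, 60),
                    Backlink("spam.example", False, 5),
                    Backlink("news.example", False, 80)],
    "rival-c.com": [Backlink("news.example", False, 80),
                    Backlink("forum.example", True, 70)],
}
print(link_intersection(profiles))
```

Here, spam.example is dropped for low authority and forum.example for nofollow. The remaining domains that link to at least two rivals become outreach prospects.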

Yet another way to create a lot of backlinks is via guest blogging. At its worst, guest blogging consists of automated email pitches and word soup blog posts that have absolutely no value for the user — the only point of a guest blog is to sneak your links onto someone else's website.

For one thing, spammy guest blogging annoys the folks at Google, and they've been annoyed for quite a while now. More importantly, Google has learned to detect spammy guest posts by looking at content quality, overly optimized anchor texts, and the rate of backlink accumulation, among other things, I assume. So today, low-quality guest posting is useless at best and a penalty waiting to happen at worst.

Guest blogging is still a valid link-building strategy, but only if done right. Again, you can use SEO SpyGlass to identify high authority websites that already host links to a few of your competitors, reach out to those websites in an authentic fashion, and offer to write a post that will add real value to their blog. Slow and steady wins the race.

Anchor texts optimized for exact keyword matches used to be considered essential for signaling link relevance, so they were widely used by SEO professionals and content creators. Google's own guidelines on link schemes list links with optimized anchor text among their examples of unnatural linking.

Exact-match anchors are no longer necessary for signaling backlink relevance — Google has gone beyond anchor text and is now using surrounding text, as well as larger context, to establish link importance. Furthermore, overly optimized anchors may be seen as unnatural and a part of a link scheme, warranting a penalty.

There is no strict standard for creating anchor text, but, in general, it should strike a balance between being descriptive and not being overly optimized. Appropriate anchor formats include branded anchors (example: Link Assistant), naked anchors (example: www.link-assistant.com), and long-form anchors (example: link schemes as defined by Google's Quality Guidelines).
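
To see where your own backlink profile stands, you can sort anchors into the formats named above plus exact-match keyword anchors. The classifier below is a rough sketch. The bucket rules, names, and keyword list are our assumptions, long-form descriptive anchors fall into "other", and Google publishes no target ratios.

```python
import re
from collections import Counter


def classify_anchor(anchor: str, brand: str, domain: str, keywords: list[str]) -> str:
    """Bucket one anchor text as naked, exact-match, branded, or other."""
    text = anchor.strip().lower()
    # Strip an optional scheme and "www." so both forms of a naked URL match.
    bare = re.sub(r"^(https?://)?(www\.)?", "", text).rstrip("/")
    domain = domain.lower()
    if bare == domain or bare.startswith(domain + "/"):
        return "naked"
    if text in {k.lower() for k in keywords}:
        return "exact-match"
    if brand.lower() in text:
        return "branded"
    return "other"  # includes long-form descriptive anchors


def anchor_profile(anchors: list[str], brand: str, domain: str,
                   keywords: list[str]) -> dict[str, float]:
    """Share of each anchor type across a list of backlink anchors."""
    counts = Counter(classify_anchor(a, brand, domain, keywords) for a in anchors)
    total = sum(counts.values())
    return {kind: n / total for kind, n in counts.items()} if total else {}


anchors = ["Link Assistant", "www.link-assistant.com", "SEO tools",
           "link schemes as defined by Google's Quality Guidelines"]
print(anchor_profile(anchors, "Link Assistant", "link-assistant.com", ["seo tools"]))
```

A profile dominated by the "exact-match" bucket is the pattern the text warns about.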

While most of us know that plagiarism is considered an unforgivable offense in the SEO landscape, some websites still copy, scrape, or otherwise lift content from other sources. Although this is usually done intentionally, to drive traffic and push a particular page to the top of the SERPs (search engine result pages), it can also happen accidentally. Google and other search engines, which aim to rank high-quality content at the top, treat it as a black hat SEO practice.

As mentioned earlier, search engines consider posting plagiarized content, whether accidental or intentional, an offense. You may face penalties imposed by search engines, including lower rankings and deindexation of pages featuring plagiarized or scraped content.

A better way to drive more traffic to your pages is to focus on creating unique and valuable content. To ensure the originality of the content on your site, check it for plagiarism before publishing. You can do this with a plagiarism checker that pairs a capable matching algorithm with an extensive database. Doing so will help protect both your pages' SERP rankings and your domain's reputation.
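
Commercial checkers compare your text against large indexes of published pages, which a short example can't reproduce. A common building block for this kind of comparison is measuring overlapping word sequences, or "shingles". The minimal sketch below compares a draft against one known source. The shingle size of 5 and the function names are our assumptions.

```python
import re


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """All runs of k consecutive lowercase words in `text`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def overlap(draft: str, source: str, k: int = 5) -> float:
    """Share of the draft's k-word shingles that also appear in `source` (0.0 to 1.0)."""
    d = shingles(draft, k)
    if not d:
        return 0.0  # draft shorter than k words: nothing to compare
    return len(d & shingles(source, k)) / len(d)
```

A high share means long passages match word for word. You would then rewrite or cite those passages before publishing. Where exactly to draw the line is a judgment call.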

In August 2018, Google released a major algorithm update that seemed to disproportionately affect medical websites, which led to a widespread belief that Expertise, Authoritativeness, and Trustworthiness (E-A-T) had become ranking factors. The update was followed by a wave of E-A-T optimization advice that included things like hiring reputable writers, optimizing About pages, optimizing T&C pages, updating old posts, spell checking, and obtaining social signals, among many other suggestions.

While none of this E-A-T optimization advice will harm your website, there hasn't been any definitive proof that such optimization has an effect on rankings. As a result, E-A-T optimization may use up resources better spent on proven SEO tactics. That being said, if you have the time, your users will definitely benefit from a more transparent website with clear expertise and authority signals.

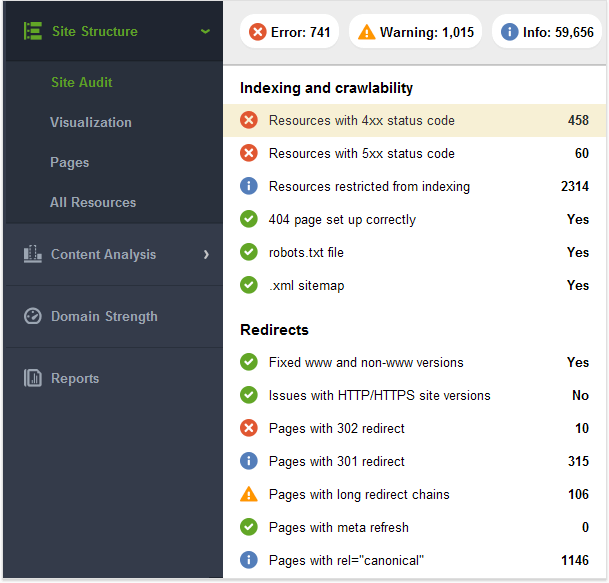

Use WebSite Auditor to run both site-wide and page-specific audits and fix dozens of content and technical issues that have been proven to affect SEO.

AMP is a Google-led open-source framework for developing extremely fast webpages. Early adopters of AMP have seen their load speeds improve dramatically and were given some search advantages by Google. Initially, there were also a number of strict design limitations, which have been gradually lifted.

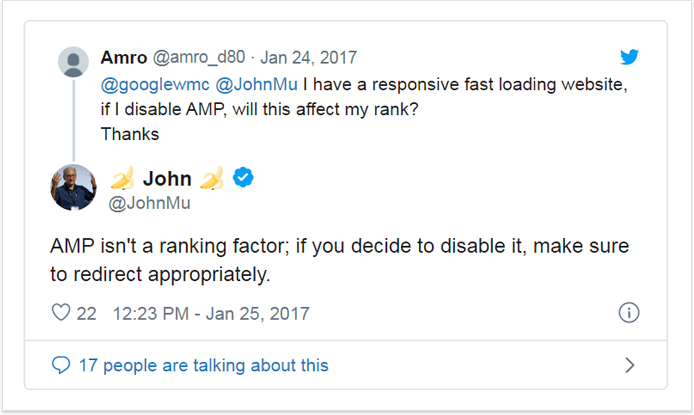

First of all, AMP is not a ranking factor.

Secondly, AMP conversion has had mixed results in the SEO community, and many adopters eventually reverted to non-AMP websites. The current consensus is that AMP is only useful for news and media websites, which are granted prime SERP positions when converted. If you are not a news or media website, you are unlikely to see any benefit from converting to AMP.

Follow web performance best practices to achieve AMP-like load times without having to convert. A good place to start is Google's PageSpeed Insights tool, which shows what kind of issues come up for your pages.

Content spinning is about creating new content by making minor changes to the old one. It can be done manually or by using dedicated software to create a mashup of several similar pages. Content spinning comes from a time when quantity was prioritized over quality and a lot of posts were necessary to grow a backlink profile and to deliver a steady stream of content for your own website.

Content spinning results in pages of very low quality, which are easily identified by Google algorithms and are either ignored or penalized. So this tactic sits somewhere between useless and dangerous.

If your primary goal is supplying your own website with content, then choose to update old posts rather than spin them into new ones. Add the current year to your title, a disclaimer at the top of the page, and make sure that all the facts are brought up to date. It takes just as much effort as it would to spin a post, except the quality goes up, and the traffic is likely to be similar to what you'd get from a new post.

Exact match domains, or keyword-rich domains, were once a very strong signal of search relevance. In fact, it was so easy to rank with a query-friendly domain name that the content of your website hardly mattered. An example of an exact match domain would be something like buyviagraonline.com or bestpizzaplacela.com.

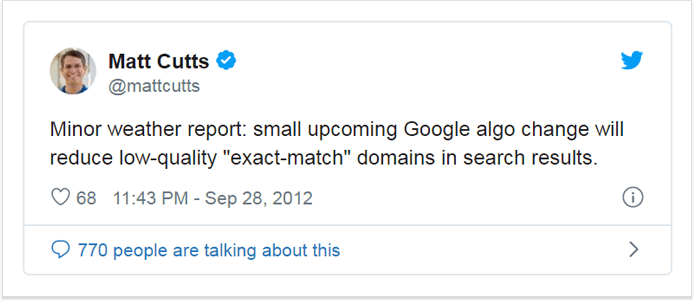

As far back as 2012, and probably even earlier, Google tweaked the algorithm to ignore exact match domains as a ranking signal and focus on content quality instead.

There is nothing wrong with having an exact match domain and Google will not penalize a quality website for its name alone. But, going for an exact match domain means you are not using your brand name, and you are probably using a keyword phrase that's entirely too long to be a domain name, both of which are user experience issues. I'd say always opt to make it easy for your users and use your brand name for a domain.

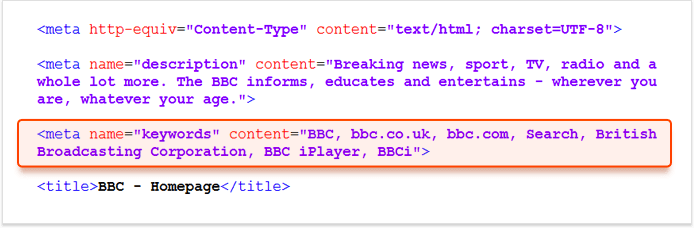

You'd think the keywords meta tag would be no more than an honorable mention by now, but it's pretty much alive and well: still requested by some clients and still present on many websites. For those who've missed it, the keywords tag is an HTML meta tag that explicitly states the target keywords for a page, like so: `<meta name="keywords" content="business laptops, office laptops, laptops for work">`.

Can you imagine the audacity of SEOs telling Google what their pages are about? Ah, those were much simpler times.

In September 2009, Google officially announced that it does not use the keywords meta tag to rank pages. It does not penalize the tag either, so you are free to use it if you want or if your client insists, but it's almost guaranteed to be a waste of time.
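
If you inherit a site and want to know whether the tag is still lurking in its templates, a quick check is enough. The sketch below uses Python's standard `html.parser`. The class name and sample markup are ours.

```python
from html.parser import HTMLParser


class MetaKeywordsFinder(HTMLParser):
    """Record the contents of a <meta name="keywords"> tag, if the page has one."""

    def __init__(self) -> None:
        super().__init__()
        self.keywords = None  # stays None when no keywords tag is found

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # Attribute names arrive lowercased; the value may be "Keywords", "KEYWORDS", etc.
        if tag == "meta" and (attributes.get("name") or "").lower() == "keywords":
            content = attributes.get("content") or ""
            self.keywords = [k.strip() for k in content.split(",") if k.strip()]


page = ('<head><title>Business laptops</title>'
        '<meta name="keywords" content="business laptops, office laptops, laptops for work">'
        '</head>')
finder = MetaKeywordsFinder()
finder.feed(page)
print(finder.keywords)
```

Finding the tag is not a problem in itself, since it isn't penalized. The check simply tells you whether anyone is still spending time maintaining it.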

The only way to tell Google what your page is about is to create quality content that matches search intent and is semantically sound, which does include some keyword use, although not as much and not in such an obvious fashion as back in the era of the keyword meta tag.

That was definitely one of the more entertaining lists I've had the pleasure of compiling — SEO has certainly been through some fun times in the past. Do you have any other obsolete SEO tactics to add to this list? Or perhaps remove? Let me know ;)

By: Andrei Prakharevich