
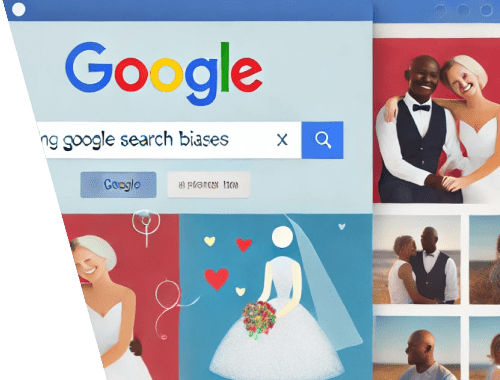

Ever noticed something strange when you search for images on Google? Type in something like “happy white woman” or “white couple” and you might expect straightforward results showing smiling white women or white couples. What you actually get can be quite different: rows of polished stock photos, overly idealized versions of happiness, or, for “white couple,” a noticeable share of interracial couples.

It’s not a bug, and it’s not some hidden agenda—it’s the result of how Google’s algorithms interpret identity, context, and diversity. These subtle mismatches between search intent and search output raise bigger questions: How do search engines define concepts like race and happiness? And are they unintentionally reinforcing—or reshaping—cultural stereotypes?

Let’s dive into how all of this works, what it reveals about algorithmic bias, and why even simple search terms carry more weight than we think.

Imagine this: you search “white couple,” and instead of just showing white couples, Google throws in a mix, including interracial couples.

This sparked plenty of questions online, with users wondering whether Google was deliberately being politically correct or trying to make a statement.

Google’s Danny Sullivan weighed in on the controversy, answering users who asked whether Google was mixing in interracial couples on purpose:

> We don't. As it turns out, when people post images of white couples, they tend to say only "couples" & not provide a race. But when there are mixed couples, then "white" gets mentioned. Our image search depends heavily on words - so when we don't get the words, this can happen.

In short, it comes down to how images are labeled. Photos of white couples are often captioned simply as “couple,” with no racial descriptor. Photos of mixed couples, by contrast, are more likely to have the word “white” in their descriptions, because the partners’ races are what the uploader thinks is worth mentioning. Since image search leans heavily on the words attached to an image, a query for “white couple” matches those captions, which explains the mix.
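To make the labeling effect concrete, here is a toy Python sketch, not Google’s actual system: a search that matches images only by whether their captions contain the query words. The `Image` class, the `search` function, and the four captions are all hypothetical, written to follow the labeling pattern Sullivan describes.

```python
"""Toy illustration (NOT Google's system): image search that matches images
purely on the words in their human-written captions."""

from dataclasses import dataclass


@dataclass
class Image:
    image_id: str
    caption: str  # text the uploader wrote (hypothetical examples below)
    depicts: str  # what the photo actually shows; the search never reads this


def tokenize(text: str) -> set[str]:
    """Split on whitespace, strip simple punctuation, and lowercase."""
    return {word.strip(".,!?\"'").lower() for word in text.split()}


def search(query: str, images: list[Image]) -> list[Image]:
    """Return images whose captions contain every query word.

    Only the caption is consulted; the `depicts` field (the "pixels") is
    ignored, mimicking a search that depends heavily on words.
    """
    query_words = tokenize(query)
    return [img for img in images if query_words <= tokenize(img.caption)]


# Hypothetical captions: same-race couples get no racial descriptor,
# mixed couples do.
CORPUS = [
    Image("a", "Happy couple on the beach", depicts="white couple"),
    Image("b", "Couple having dinner", depicts="white couple"),
    Image("c", "Black man and white woman, a happy couple", depicts="mixed couple"),
    Image("d", "White husband and Asian wife, young couple", depicts="mixed couple"),
]

if __name__ == "__main__":
    for img in search("white couple", CORPUS):
        print(img.image_id, "->", img.depicts)
```

Searching for “white couple” returns only images c and d, both mixed couples, even though a and b actually show white couples: the words, not the pixels, decide what matches.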

Search for “happy white woman” and the results can be just as surprising: often polished stock images, or images of interracial couples, even when that wasn’t your intent. At first glance this might look like a glitch, or even an agenda. In reality, it points to something more complex: how modern AI infers intent, and how bias creeps into that inference.

Google’s image algorithm doesn’t just look for the literal phrase “happy white woman.” It tries to guess why you're searching for it. If, over time, a search term is often used in polarizing or controversial contexts—such as debates about race, representation, or media bias—Google’s AI may start surfacing results that reflect that broader conversation, rather than just the literal keywords.

In this case, what you see is shaped by a mix of cultural input and algorithmic inference. AI models trained on web-wide data may overfit to dominant patterns—such as associating “white woman” with interracial imagery if that association frequently appears in trending discussions, click behavior, or viral content. The system assumes that’s what users are looking for, even if that’s not what every individual intends.
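How behavioral signals can pull results away from the literal keywords can be sketched with a second toy example. Google does not publish its ranking formula; the scoring rule below (keyword score plus a weighted click-through rate), the `click_weight` knob, and all the numbers are assumptions chosen purely for illustration.

```python
"""Toy illustration (NOT Google's ranking): blending literal keyword relevance
with historical click-through rate (CTR) lets click patterns outrank
literal matches."""

from dataclasses import dataclass


@dataclass
class Candidate:
    image_id: str
    keyword_score: float  # 0..1, how literally the caption matches the query
    clicks: int           # hypothetical past clicks for this query
    impressions: int      # hypothetical times it was shown for this query

    @property
    def ctr(self) -> float:
        """Click-through rate; 0.0 if the image was never shown."""
        return self.clicks / self.impressions if self.impressions else 0.0


def rank(candidates: list[Candidate], click_weight: float) -> list[str]:
    """Order image ids by keyword_score + click_weight * ctr, highest first.

    click_weight is a made-up knob: 0.0 means purely literal ranking,
    larger values let past user behavior dominate.
    """
    ordered = sorted(
        candidates,
        key=lambda c: c.keyword_score + click_weight * c.ctr,
        reverse=True,
    )
    return [c.image_id for c in ordered]


CANDIDATES = [
    Candidate("literal_match", keyword_score=0.9, clicks=20, impressions=1000),
    Candidate("viral_image", keyword_score=0.5, clicks=300, impressions=1000),
]

if __name__ == "__main__":
    print(rank(CANDIDATES, click_weight=0.0))  # ['literal_match', 'viral_image']
    print(rank(CANDIDATES, click_weight=2.0))  # ['viral_image', 'literal_match']
```

With `click_weight=0.0` the literal match ranks first; with `click_weight=2.0` the image users clicked more often overtakes it (0.5 + 2.0 × 0.3 = 1.1 versus 0.9 + 2.0 × 0.02 = 0.94). Because higher-ranked images tend to collect more clicks, a rule like this can also feed on itself, which is one way an association gets amplified over time.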

Interestingly, when the same search is done on platforms like DuckDuckGo, the results are far more conventional and closer to what users originally expect. A plausible explanation is that DuckDuckGo leans more on content-based taxonomies, while Google leans heavily on AI-driven sentiment and intent modeling. Either way, the difference shows that search results depend not just on what’s out there on the web, but on how a platform interprets user behavior.

Another layer to this is content creation itself. Many of the images that dominate such search terms come from stock photo databases, which tend to portray highly curated, commercialized versions of joy, gender, and race. So while Google plays a role in surfacing those images, the deeper bias often starts with how content is produced, tagged, and fed into the algorithm.

What’s especially concerning is that AI models, unlike human editors, can’t readily explain their decisions. If a human editor selects an image for a magazine, they can tell you why. An AI model instead makes predictions based on statistical correlations in massive datasets, and those correlations can reflect the biases, blind spots, and stereotypes already baked into society.

So while the search for “happy white woman” may seem like a minor curiosity, it exposes something much bigger: how AI interprets our queries, how it amplifies certain representations, and how it quietly reshapes what we consider "normal." It’s not just about search results—it’s about the cultural lens being automated.

Here’s the deal: Google doesn’t intentionally add bias to its image search results. The problem lies largely in how content is created and labeled online, and in how algorithms learn from that content.

This isn’t just a quirky Google glitch—it’s a reminder of how technology shapes our perceptions. When search results unintentionally reinforce stereotypes or fail to meet expectations, they have real-world impacts.

For example, when searching “white couple,” if users repeatedly see a mix of interracial couples, it can challenge preconceived notions about relationships (which isn’t necessarily bad). On the other hand, stereotypical results like those for “happy white woman” can reinforce narrow societal views.

So can Google fix this? The short answer is that it’s trying. Google constantly updates its algorithms to tackle issues like this, and it is also looking into ways to better understand context and improve diversity in its results. But it’s a tricky balance: how do you fix biases without stepping on free speech or ignoring how content creators label their work?

Google’s image search results, whether for “white couple” or “happy white woman,” aren’t deliberately racist or biased—they’re a reflection of our society and its content. The responsibility to address these biases lies not just with Google but with everyone who creates, shares, and consumes online content.

So next time you notice something off about a search result, remember: it’s not just Google’s algorithms—it’s us too.