
Google Search Console is an odd SEO tool. It feels like a somewhat random assortment of search reports. Some are useful, some could be useful if you know what you are doing, and others are just meh — pretty to look at, but no real insights.

On the upside, Google Search Console pulls reliable data directly from Google and is free to use for all website owners. So, if you are motivated enough, and especially if you are on a budget, you can do quite a bit of search optimization with this tool alone. Besides, you can leverage Search Console to diagnose possible reasons behind sudden ranking drops.

In this SEO audit guide, we will discuss some of the more useful Google Search Console features and provide detailed instructions on how to turn them to your advantage.

Check out this video. Mary from our team will carefully explain all the details of how to audit your website with Google Search Console.

To perform an SEO audit with Search Console, you first have to add your website to GSC.

Log in to Search Console or create an account, click the upper left drop-down bar, and click Add property.

Then, enter your domain address. You can add the entire new domain or some specific URLs depending on what you want to track.

Finally, you have to verify your website ownership. If you have added a new domain, you have no other option but DNS verification. Although the process may differ for different domain providers, Google Search Console has provided clear instructions for any verification case. Click the grey arrow near the Instructions for line to call the drop-down menu and choose your provider, and follow the GSC instructions.

If you added a specific URL rather than the whole domain, you have a wider choice of verification options:

The website verification process on Google Search Console usually goes smoothly, so you can start auditing your website soon enough. Still, some errors may occur. Make sure your server responds properly and your website can be accessed.

Once you have added your website to Google Search Console, you can proceed with an SEO audit.

The first thing to check when performing any SEO audit is whether Google can access all of the pages that you want to appear in search. There are many reasons Google may be blocked from your pages, and sometimes it happens by accident. In that case, some of your important pages just sit idle, bringing zero traffic and wasting your SEO effort.

To check whether you have any indexing issues, in Google Search Console go to Index > Coverage and check the status of your website’s pages:

Pay attention to the Error and Valid with warning sections to figure out what’s wrong with these pages and how to fix the issues.

In Search Console, the Error report displays all the cases when Google hasn't managed to index your pages because they either don’t exist or have access restrictions. For example, the screenshot below demonstrates that the website has two types of indexing errors: Submitted URL marked ‘noindex’ and Server error (5xx).

You can click on each error to get a list of affected URLs. From there, you can click on each URL and have it inspected by Google Search Console. This will result in a quick report on the page’s current indexing status and possible problems. Here are the most common indexing errors and some tips on how you can solve them to bring your SEO strategy back in line:

Submitted URL cannot be indexed. These types of issues happen when you have asked Google to index a URL, but the page cannot be accessed. The first thing to check here is whether you actually intended for the page to be displayed in search or not.

If you don’t want your page indexed, you have to recall your index request so that Google can stop trying. To do that, submit the URL for inspection and see why Google is trying to index it in the first place. The most likely reason is you have added the URL to one of your sitemaps by mistake, in which case just edit the sitemap and remove the URL.

If you do want your page to be indexed, then you have to stop blocking access. There are several ways you might be blocking Google, so here is what you can do in each case:

Submitted URL not found (404) means that the page does not exist, and the server returned a 404 status code. Check if the content was moved, and set up a 301 redirect to the new location.

Submitted URL seems to be a Soft 404. This error appears when your server labeled the page with OK status, but Google decided the page is 404 (not found). This may occur because there’s little content on the page, or because the page moved to a new location. Check if the page has good comprehensive content and add some if it’s thin. Or set up a 301 redirect if the content was moved.

Submitted URL blocked by robots.txt error can be fixed by running the robots.txt tester tool on the URL, and updating the robots.txt file on your website to change or remove the rule.

Submitted URL returns unauthorized request (401) means that Google cannot access your page without verification. You can either remove authorization requirements or let Googlebot access the page by verifying identity.

Submitted URL returned 403 error happens when Google has no credentials to perform authorized access. If you want to get this page indexed, allow anonymous access.

Once you’ve removed whatever was blocking the access, submit the URL for indexing using the Google Search Console URL inspection tool.
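The cases above can be condensed into a small lookup. The following is only a sketch: the function name, its parameters, and the 50-word cutoff for thin content are assumptions made for illustration, and Google does not publish the rule it uses to detect soft 404s.

```python
def classify_submitted_url(status_code, blocked_by_robots=False,
                           word_count=None, thin_content_words=50):
    """Return the Coverage label that most likely applies to a submitted URL.

    thin_content_words is an assumed cutoff for "little content"; it is a
    placeholder, not a value taken from Google.
    """
    if blocked_by_robots:
        return "Submitted URL blocked by robots.txt"
    if status_code == 404:
        return "Submitted URL not found (404)"
    if status_code == 401:
        return "Submitted URL returns unauthorized request (401)"
    if status_code == 403:
        return "Submitted URL returned 403"
    if 500 <= status_code <= 599:
        return "Server error (5xx)"
    # A 200 response with very little text is a soft 404 candidate.
    if (status_code == 200 and word_count is not None
            and word_count < thin_content_words):
        return "Submitted URL seems to be a Soft 404"
    return "No blocking issue detected"


if __name__ == "__main__":
    print(classify_submitted_url(401))
    print(classify_submitted_url(200, word_count=12))
```

You would feed it the status code your server returns for each submitted URL, for example from a crawl export, and work through the pages label by label.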

Server error (5xx) occurs when Googlebot fails to access the server. The server may have crashed, timed out, or been down when Googlebot came around. Check the URL with the Inspect URL tool to see if it displays an error. If yes, check the server, see what Google suggests to solve the problem, and initiate validation once again if the server is fine.

Redirect error may occur if a redirect chain is too long, a redirect URL exceeds the max URL length (2 MB for Google Chrome), there’s a bad URL in the chain, or there’s a redirect loop. Check the URL with a debugging tool such as Lighthouse to figure out the issue.
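Two of these causes, loops and overly long chains, are easy to check offline once you have a map of your redirects. Here is a minimal sketch, assuming the redirects are available as a plain dictionary of source URL to target URL. The function name and the default of 10 hops are assumptions; check Google's documentation for the limit that applies to Googlebot.

```python
from typing import Dict, List, Tuple


def trace_redirects(start: str, redirects: Dict[str, str],
                    max_hops: int = 10) -> Tuple[str, List[str]]:
    """Follow a redirect map from start and report what kind of chain it is.

    Returns a (status, chain) pair where status is "ok", "loop" or "too_long".
    max_hops is an assumed limit, not an official value.
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        target = redirects[current]
        chain.append(target)
        if target in seen:
            # We came back to a URL that is already in the chain.
            return "loop", chain
        seen.add(target)
        current = target
        if len(chain) - 1 > max_hops:
            return "too_long", chain
    return "ok", chain


if __name__ == "__main__":
    site = {"/old": "/newer", "/newer": "/newest", "/x": "/y", "/y": "/x"}
    print(trace_redirects("/old", site))
    print(trace_redirects("/x", site))
```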

If Google indexed your page but is not sure if it was necessary, then Search Console will label these pages as valid with warning.

In terms of SEO, warnings may bring you even more trouble than errors, as Google may display the pages that you didn’t want to show.

Indexed, though blocked by robots.txt warning appears when the page is indexed by Google despite being blocked by your robots.txt file. How to fix this issue? Decide on whether you want to block this page or not. If you want to block it, then add the noindex tag to the page, limit access to the page by login request, or remove the page by going to Index > Removals > New request.

Note: many SEOs mistakenly assume that robots.txt is the right mechanism to hide a page from Google. This is not true: robots.txt serves mainly to prevent overloading your website with requests. If you block the page with robots.txt, Google may still display it in search results, for example when other pages link to it.
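Before deciding between robots.txt and noindex, it helps to know which URLs your current rules actually block. The sketch below uses Python's standard `urllib.robotparser` for a quick local check. The rules and URLs are made-up examples, and the standard-library parser uses simple prefix matching, so its answers may differ from Googlebot's for rules that rely on wildcards.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt content disallows url for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


# Hypothetical rules, for illustration only.
EXAMPLE_RULES = """\
User-agent: *
Disallow: /private/
"""

if __name__ == "__main__":
    for page in ("https://example.com/private/report", "https://example.com/blog/post"):
        print(page, "blocked" if is_blocked(EXAMPLE_RULES, page) else "crawlable")
```

A URL reported as blocked here can still end up in the index, so pages you want hidden need the noindex tag and must stay crawlable for Google to see that tag.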

Indexed without content means the page is indexed, but, for some reason, Google cannot read the content, which is definitely bad for SEO. This may happen because the page is cloaked, or the format of the page is not recognized by Google. To fix this issue, check the code of your page and follow Google’s tips on how to make your website accessible for users and search engines.

A more comprehensive technical SEO audit would include checking and fixing many other issues that cannot be detected with Search Console reports. Such issues may include broken images or videos, duplicate titles and meta descriptions, localization problems, and many others. If you want to run a full-scale technical SEO audit of your website, then consider using WebSite Auditor. This SEO tool covers all of the indexing issues discussed above as well as many other areas of technical optimization.

To run a technical SEO audit in Website Auditor, go to Site Structure > Site Audit, and the tool will provide you with the information on all the types of issues that you need to fix.

The report layout fits on one screen, providing you with the information on all the detected errors and SEO hazards: error type with a detailed description, location, and the best practices on how to solve the problem. Unlike with Search Console, the entirety of your audit can be performed from a single dashboard, with no need to jump from screen to screen in search of a solution.

Poor user experience leads to lower levels of engagement and higher bounce rates. As user activity declines, Google may decide that your pages are becoming less relevant. And when it happens, your positions in search start dropping. Moreover, user experience is a ranking factor, so UX optimization is a must for your SEO strategy. Luckily, Google Search Console provides us with a bunch of UX reports, so we can fix any issues before we get in trouble.

Core Web Vitals represent the loading speed, interactivity, and visual stability of a page. Ideally, Google wants the main content of each page to load in under 2.5 seconds, which is its threshold for a good Largest Contentful Paint (LCP). You can use the report to see which of your pages hit this benchmark.

Once you open the Core Web Vitals report, GSC will show you how many of your pages need changes, and give you a list of discovered issues:

The algorithm for investigating all of the issues is the same: click on the issue > click on the URL > go to PageSpeed Insights for a more detailed report and possible solutions.

Note: Google’s page speed benchmark of 2.5 seconds is hard to reach for most websites. In fact, the average page speed across the web is currently at 18.8 seconds. This means you don’t have to be as fast as Google says; you just have to be slightly faster than your competitors. To figure out what your real benchmark is, check your competitors’ websites using PageSpeed Insights.
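One way to turn this comparison into a number is to collect the load times PageSpeed Insights reports for a handful of competitors and compare your own page against them. The sketch below is an assumption-laden illustration: the function name is hypothetical, the timings are entered by hand, and using the median as the "real benchmark" is just one reasonable choice.

```python
from statistics import median

GOOGLE_BENCHMARK_S = 2.5  # the benchmark quoted in this guide


def speed_report(own_seconds, competitor_seconds):
    """Compare your load time with Google's benchmark and with competitors."""
    target = median(competitor_seconds)  # assumed definition of the real benchmark
    return {
        "competitor_median_s": target,
        "meets_google_benchmark": own_seconds <= GOOGLE_BENCHMARK_S,
        "faster_than_competitors": own_seconds < target,
    }


if __name__ == "__main__":
    # Made-up timings, in seconds.
    print(speed_report(4.0, [5.0, 6.0, 7.5]))
```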

The Mobile Usability report in Google Search Console shows you the issues that mobile users experience while interacting with your website.

Google Search Console briefly describes all the errors, so you can easily understand what to fix, and how. To get more insights, click on the issue to see the pages affected. Then click on the URL, test your page to see all the errors, and get useful tips on how to solve them.

Once you fix errors, click the Validate fix button to initiate validation and remove the error from the report.

Google Search Console will usually have a number of other reports under the Enhancements tab. The types of reports you see there will depend on what kind of structured data you are using on your website.

You can see in the screenshot below that the website we are using for this example has four types of structured data (FAQ, logos, products, and reviews). There is also a separate report for unparsable structured data, which means that there is some structured data that Google cannot recognize because of a syntax error:

The algorithm is the same — click on the report, check out the details, and find the right solution. GSC explains everything here in plain English, so if there’s a missing image property, the system will clearly state Missing field “image”. Literally.

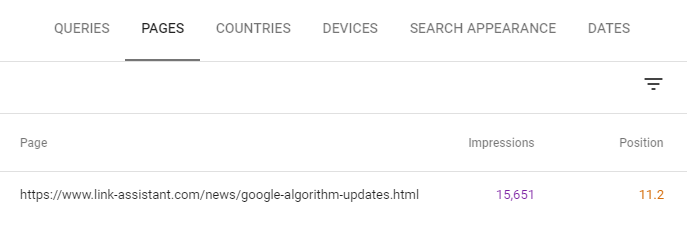

Google Search Console tracks current ranking positions of your keywords. You can use these insights to find the keywords on the verge of occupying high traffic positions in SERP. A little effort in optimizing these pages will go a long way in boosting your traffic.

To find underperforming keywords in Search Console, go to Performance > Search results, enable Total impressions and Average position, and scroll down to the table of queries.

Now you have to decide which positions to consider ‘underperforming’. Some SEOs say that moving your keywords from positions 4-5 to positions 1-3 will bring you the most benefit. Of course, this will require comprehensive optimization of your pages due to high competition. Others believe that moving from page two to page one is a better investment of your resources. In this case, slightly optimizing the performance of keywords ranked 11-15 would be enough.

Set up filters to show underperforming keywords. Click on filter > position > greater than, and set the cutoff point of either 3 or 10, depending on your strategy. You can also filter your keywords by impressions. If a keyword is currently in position 5, but gets 20 impressions per month, there is little to be gained from moving this keyword to a better position. Click filter > impressions > greater than to eliminate keywords with very low impression count.
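If you export the query table, the same two filters can be applied in a few lines. This is a sketch only: the row keys (`query`, `position`, `impressions`), the function name, and the sample numbers are assumptions rather than the exact shape of a Search Console export.

```python
def underperforming_keywords(rows, position_cutoff, min_impressions,
                             max_position=None):
    """Keep queries ranked below the cutoff that still get enough impressions.

    position_cutoff mirrors "position > greater than" (3 or 10 in this guide);
    max_position is an optional upper bound, e.g. 15 for the 11-15 range.
    """
    picked = []
    for row in rows:
        if row["position"] <= position_cutoff:
            continue
        if max_position is not None and row["position"] > max_position:
            continue
        if row["impressions"] <= min_impressions:
            continue
        picked.append(row)
    # Highest impressions first: these have the most traffic to gain.
    return sorted(picked, key=lambda r: r["impressions"], reverse=True)


if __name__ == "__main__":
    sample = [
        {"query": "seo audit", "position": 4.2, "impressions": 1800},
        {"query": "gsc guide", "position": 5.0, "impressions": 20},
        {"query": "site audit tool", "position": 2.1, "impressions": 5000},
        {"query": "index coverage", "position": 12.3, "impressions": 950},
    ]
    for row in underperforming_keywords(sample, position_cutoff=3, min_impressions=100):
        print(row["query"], row["position"], row["impressions"])
```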

Now Search Console gives you the list of underperforming keywords with the highest potential. Click a certain query and select Pages to see the pages that rank for the query.

Google Search Console cannot tell you what you should do to optimize the page and win better positions, but a tool like WebSite Auditor can. Go to Content Analysis > Content Editor > Optimize existing page content, enter the URL of the page you’re working on and its main keyword. The tool will analyze your page against the pages of your top competitors and suggest some ways of improving your content (optimizing content length, adding more keywords, rephrasing your headings, and creating attractive snippets).

The tool lets you edit the content of your page right in browser mode, with further possibility to download the edited HTML file and add it to your website.

You can use Search Console to figure out if some of your snippets have lower CTR than is normal for their position.

The thing is that each position in search results averages a certain percentage of clicks. For example, if you rank in position one, then your click-through rate is likely to be around 30%. If it’s significantly below 30%, then there might be something wrong with your snippet, and it needs urgent optimization.

First, go to advancedwebranking.com to see the CTR benchmarks for your type of SERP. Say, if there is an ad or a Google featured snippet at the top of the search results page, then they will obviously steal some of your clicks. So the first thing to do is check the CTR benchmarks for the SERPs you actually compete in:

Now let’s switch to GSC. Go to Performance > Search results, enable Average CTR, Average position, and Total impressions. Filter impressions to eliminate low-volume keywords, and sort the table by Position in ascending order:

Scroll the table, paying attention to the CTRs that seem too low for their positions, and investigate the reason. Turn to Google and type the underperforming query. Low click-through-rate may be the result of many factors: SERP may contain a lot of ads or featured snippets that take most of the users’ attention and clicks, or maybe your snippet is not as catchy as those of your competitors. Try using structured data to turn a regular snippet into a rich one, or optimize your title and description.
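Scanning a long table by eye gets tedious, so the same check can be scripted against an export. In this sketch, the 30% figure for position one comes from the text above, while the values for positions two and three, the 0.5 tolerance, the row keys, and the function name are all placeholder assumptions. Replace the benchmarks with the ones that match your SERPs.

```python
# CTR benchmarks by position. Only the value for position 1 comes from this
# guide; the others are hypothetical placeholders.
BENCHMARKS = {1: 0.30, 2: 0.15, 3: 0.10}


def low_ctr_queries(rows, benchmarks=BENCHMARKS, tolerance=0.5):
    """Return queries whose CTR is below tolerance * benchmark for their position."""
    flagged = []
    for row in rows:
        expected = benchmarks.get(round(row["position"]))
        if expected is None:
            continue  # no benchmark for this position, nothing to compare
        if row["ctr"] < expected * tolerance:
            flagged.append(row["query"])
    return flagged


if __name__ == "__main__":
    sample = [
        {"query": "seo audit", "position": 1.2, "ctr": 0.10},
        {"query": "gsc guide", "position": 1.4, "ctr": 0.28},
        {"query": "index coverage", "position": 2.1, "ctr": 0.05},
        {"query": "sitemap limits", "position": 8.0, "ctr": 0.01},
    ]
    print(low_ctr_queries(sample))
```

The tolerance keeps small deviations from being flagged; only snippets that earn less than half of the expected clicks are returned.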

It’s natural for a page to lose traffic as time goes by and it becomes outdated. To find out which pages need an update, turn to Search Console’s Search results, click Date > Compare > Compare last 6 months to previous period.

Enable Clicks, and switch to Pages to see what pages experienced the greatest traffic loss over the last six months.

The traffic loss may be a sign of the need for updates. Look through the page and check if the information is still up-to-date, compare it to the competitors’ pages on the same topic, check if there are enough keywords, or maybe titles and headings require optimization.
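The period comparison itself is simple arithmetic and can be reproduced on exported data. The sketch below assumes two dictionaries that map page URLs to clicks, one per period. The function name and sample values are made up for illustration.

```python
def biggest_losses(current_clicks, previous_clicks, top_n=10):
    """List pages that lost clicks between two periods, largest loss first."""
    losses = []
    for page, before in previous_clicks.items():
        after = current_clicks.get(page, 0)  # a page missing now counts as 0 clicks
        if after < before:
            losses.append((page, before - after))
    losses.sort(key=lambda item: item[1], reverse=True)
    return losses[:top_n]


if __name__ == "__main__":
    previous = {"/cheap-flights": 900, "/seo-audit": 400, "/about": 50}
    current = {"/cheap-flights": 300, "/seo-audit": 450, "/about": 40}
    for page, lost in biggest_losses(current, previous):
        print(page, "lost", lost, "clicks")
```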

Note: it’s important to see the difference between the topics that do need updates and those that have simply lost their popularity. Check the topic of the page in Google Trends to see if it’s worth your further attention.

Covid-19 brought tourism to the brink of survival, so it’s natural that the “cheap flights” query is not that popular anymore.

Backlinks do matter for SEO — they are up there with the most important ranking factors. To check how many backlinks you have, and what websites link to you, click Links on the left side menu of Search Console. Then click more on the Top linked pages table in the External links section. Sort Linking sites, and click on a line to see what domains link to your page.

The truth is that the backlink report of Google Search Console has too little information, so you might be left asking yourself how to check backlink quality. There are no insights on when the backlink was created, what text is used for anchors, and whether the backlink is dofollow or not, so the information from Search Console is of little use for optimizing a backlink profile.

If you want to get a high-quality link audit, you may use a tool like SEO SpyGlass. The tool collects the data with its own backlink API, but connecting your Google Search Console account to SEO SpyGlass will give you a more comprehensive picture of what your backlink profile is.

Go to Backlink Profile and navigate through sections to get a good understanding of the quality of backlinks. See domain penalty risk to learn which backlinks should be disavowed. Visit other tabs to diversify anchor texts, track referral traffic, and receive other insights.

The tool also lets you view a backlink profile of your competitors and compare it to that of yours. This allows you to find overlooked backlink opportunities and start an outreach campaign. Go to Domain Comparison > enter the domains of your competitors > click Link Intersection to see what domains link to your competitors but not yet to you.

Internal links are also a ranking factor, although a less powerful one. To see which pages lack internal links, and to choose which pages require your attention first, go to Top linked pages on the Internal links report. Sort the links in ascending order.

Note: not all pages with internal links are worth additional link juice. Some are outdated, others are simply not important. Use your own judgment to decide which pages are unfairly underlinked.

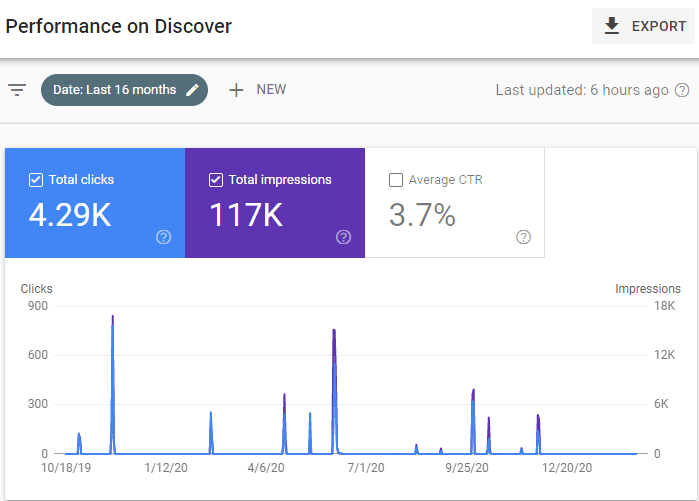

Google Discover is an emerging source of organic traffic, and SEOs are dying to take advantage of it. One oddity of Google Discover is that its traffic cannot be tracked with Google Analytics. So, if you want to check which of your pages have made it to Google Discover, you have to use Google Search Console.

Go to Performance > Discover to view your Discover report:

What you are likely to see on the Discover report is a few spikes here and there. That's because Discover tends to feature fresh articles on trendy topics, and those features are quite short-lived — most pages stay up for just one to three days. What you can do is check which of your topics got featured, spot a pattern, and add more of these topics to your content plan.

Keep in mind that Discover is bonus traffic and it favors very specific types of content. There is no point in obsessing over it and redesigning your entire content strategy — you might sacrifice your main sources of traffic and end up at a net loss.

Google issues a manual action against a website when a human reviewer determines that a page or a website does not comply with Google's webmaster quality guidelines. The reasons for manual penalties vary: the website contains spam, uses black hat SEO practices, has unnatural backlinks, has thin content, cloaks pages, and so on. See the full list of violations in Google’s documentation.

When your website receives manual penalties, some or all of your pages will be deindexed in search. If you experience a sudden traffic loss, the first thing to check is the Manual actions report in Search Console. Normally, it will look like this:

But it may also look like this, which means that you need to take action urgently:

To investigate the issue, click on the line to see the details and take action. Click Learn more to look at suggested steps to fix the problem:

Note: to deal with the issue, you have to fix all the affected pages. Partial fixing will not solve the problem.

Once you’ve fixed all the issues, click Request review, and describe what has been done.

A good request:

explains the exact quality issue on your website

describes the steps you’ve taken to fix the issue

documents the outcome of what you’ve done

Google will send you a notification when your request has been received and accepted or rejected. Don’t send another request until the previous one is answered — this will not speed up the review process.

As to security issues, they arise when your website is hacked, or could potentially harm a user by phishing attacks or installing malware on a user’s computer.

The pages with security issues may appear in search with a warning label.

The algorithm is the same as for manual action issues. Go to the Security issues report of Search Console, investigate the details of the issue, fix all of them, and request a review.

A sitemap is a document placed on your website to help Google navigate through the structure of your website. It tells Google where your pages are located and how often they should be crawled. Sitemaps speed up the indexing process as they make it easier for Google to find the pages.

To add a sitemap (or several) to your website with Google Search Console, go to Sitemaps > enter a sitemap URL > click Submit.

A sitemap file:

must not be larger than 50 MB

must not contain more than 50,000 URLs

If your website has more than 50,000 URLs, you need to add several sitemap files. In this case, each sitemap file has to be listed in a sitemap index file, which may not contain more than 50,000 sitemap files, and must not be larger than 50 MB. You can add several sitemap index files as well.
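The splitting rule can be sketched with the standard library alone. This is a minimal illustration, assuming a flat list of page URLs. The function names and the example.com addresses are hypothetical, and the code enforces only the 50,000-URL limit, so the 50 MB size limit still has to be checked separately.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000


def chunk_urls(urls, size=MAX_URLS_PER_FILE):
    """Split a list of URLs into groups that fit into one sitemap file each."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap(urls):
    """Return the XML of a single sitemap file listing the given page URLs."""
    root = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(root, "url"), "loc").text = url
    return ET.tostring(root, encoding="unicode")


def build_sitemap_index(sitemap_urls):
    """Return the XML of a sitemap index that points to each sitemap file."""
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        ET.SubElement(ET.SubElement(root, "sitemap"), "loc").text = url
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    pages = [f"https://example.com/page-{n}" for n in range(120_001)]
    chunks = chunk_urls(pages)
    files = [f"https://example.com/sitemap-{i + 1}.xml" for i in range(len(chunks))]
    print(len(chunks), "sitemap files")
    print(build_sitemap_index(files))
```

In practice you would write each `build_sitemap` result to its own file, publish the index, and submit only the index URL in Search Console.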

If there’s no sitemap provided for your website, Google will crawl your pages on its own, and check your pages for updates as often as it decides.

Note: a sitemap is not a guarantee that Google will crawl your website accordingly. A sitemap is just a recommendation for Google, which may or may not be taken into account.

Forced indexing will make your page appear in search results faster than if you just waited for Googlebot to find the page on its own. So your SEO strategy will start paying off sooner, too.

Once you update or add a page, you can ask Google to index it via Search Console. Enter the URL into the search field of Google Search Console and click Request indexing.

Voila! Your page is now queued for indexing, although it may take anywhere between a few minutes and a few days to appear in search. That is still probably faster than waiting for organic indexing.

Over the past few years, Google Search Console has added quite a few useful features and has grown into a fairly capable website audit tool. It still has a way to go and falls short of commercially available SEO tools in terms of data and convenience. But, if you are on a budget, a good deal of search optimization can be done using Search Console alone.