Fix all technical issues on your site

(with the help of WebSite Auditor)

Do away with tech problems that are holding your rankings back

It's important that you spot and eliminate technical problems on your site before they grow into an SEO problem. Otherwise, apart from creating a not-so-smooth user experience for your visitors, you also risk losing your search engine rankings — or even not getting your whole site crawled and indexed by search engines at all!

That is why before you start optimizing your pages for target keywords, you need to run a comprehensive site audit to identify and fix issues that can cost you search engine ranks.

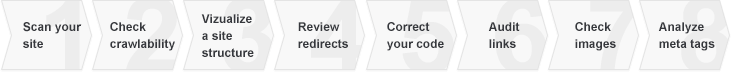

Step 1: Run a site scan

First things first, you need to run a comprehensive site scan to collect all of your site's pages and resources (CSS, images, videos, JavaScript, PDFs, etc.) so that you can later audit and analyze your entire website.

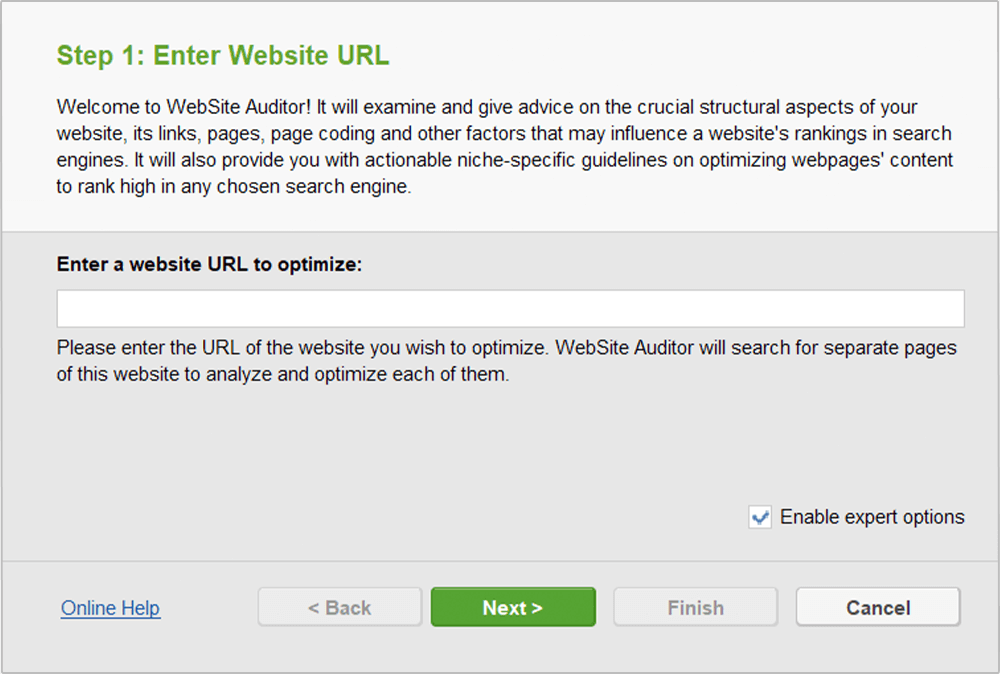

How-to: Collect your site's pages. Run WebSite Auditor and enter your website's URL to start the scan.

Depending on how large the site is, you may have to hang on for a couple of minutes until all pages have been scanned.

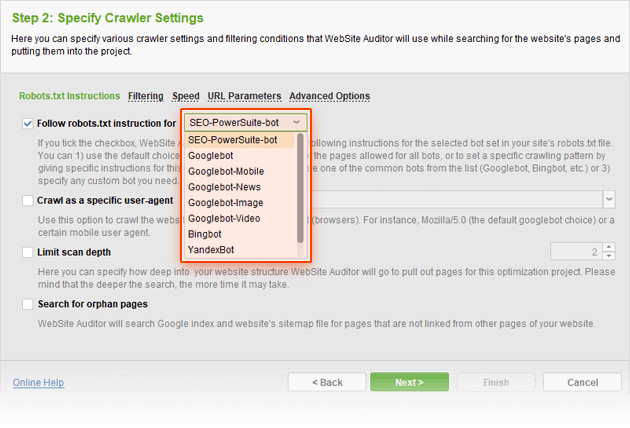

Tip: Crawl your site as Googlebot (or any other bot). By default, WebSite Auditor crawls your site using a spider called SEO-PowerSuite-bot, which means it obeys the robots.txt instructions addressed to all bots (User-agent: *). You may want to tweak this setting to crawl the site as Google, Bing, Yahoo, etc., or to ignore robots.txt instructions altogether and collect all pages of your site, even the ones disallowed in your robots.txt.

To do this, create a WebSite Auditor project (or rebuild an existing one). At Step 1, enter your site's URL and check the Enable expert options box. At Step 2, click on the drop-down menu next to the Follow robots.txt instructions option. Select the bot you'd like to crawl your site as; if you'd like to discard robots.txt during the crawl, simply uncheck the Follow robots.txt instructions box. Finally, hit Next to proceed with the crawling.
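To see why the choice of bot matters, here is a minimal Python sketch that uses the standard library's `urllib.robotparser`. The robots.txt content and URLs are hypothetical. Python's parser is also simpler than Google's (for example, it does not support `*` and `$` wildcards inside paths), so treat this as an illustration of how bot-specific groups work, not as a replica of any search engine's behavior.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: generic bots are kept out of /private/,
# while Googlebot has its own group that only blocks /drafts/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /drafts/
"""


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/private/report.html"
    for agent in ("SEO-PowerSuite-bot", "Googlebot"):
        # A bot that has its own group follows that group and ignores "*".
        print(agent, can_crawl(ROBOTS_TXT, agent, page))
```

Here the generic crawler is refused `/private/report.html`, but Googlebot is allowed to fetch it. That is why a crawl run "as Googlebot" can surface pages that a default crawl skips.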

Step 2: Check crawlability and indexing issues

It's crucial that users and search engines can easily reach all the important pages and resources on your site, including JavaScript and CSS. If your site is hard to crawl and index, you're probably missing out on lots of ranking opportunities. On the other hand, you may well want to hide certain parts of your site from search engines (say, pages with duplicate content).

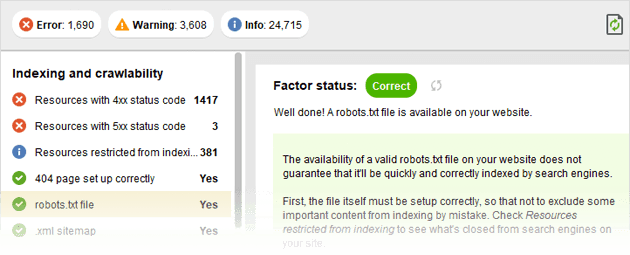

The main aspects to pay attention to are proper indexing instructions in your robots.txt file and proper HTTP response codes.

How-to: 1. Check if your robots.txt file is in place. If you're not sure whether you have a robots.txt file or not, check the status of the Robots.txt factor in Site Audit.

2. Make sure none of your important pages are blocked from indexing

If your content cannot be accessed by search engines, it will not appear in search results. So check the list of pages that are currently blocked from indexing, and make sure no important content has been blocked by accident.

Switch to the Resources restricted from indexing section in Site Audit to revise which of your site's pages and resources are blocked by:

- the robots.txt file itself

- the "noindex" tag in the <head> section of pages

- the X-Robots-Tag in the HTTP header
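You can also check the last two mechanisms (the meta tag and the header) yourself. The sketch below is a hypothetical helper, not part of WebSite Auditor. It uses Python's `html.parser` to read `<meta name="robots">` and `<meta name="googlebot">` tags, and it inspects an `X-Robots-Tag` header that is passed in as a plain dict. It assumes that `none` implies `noindex`, and it drops an optional bot-name prefix such as `googlebot:` from header values.

```python
from html.parser import HTMLParser
from typing import Dict, Set

# "none" is shorthand for "noindex, nofollow", so it also blocks indexing.
NOINDEX_VALUES = {"noindex", "none"}


def split_directives(value: str) -> Set[str]:
    """Split 'noindex, nofollow' into directives, dropping a 'botname:' prefix."""
    return {part.split(":")[-1].strip().lower() for part in value.split(",")}


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives |= split_directives(attr.get("content", ""))


def is_noindex(html: str, headers: Dict[str, str]) -> bool:
    """True if the page is blocked from indexing by a meta tag or X-Robots-Tag."""
    parser = RobotsMetaParser()
    parser.feed(html)
    found = set(parser.directives)
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":  # header names are case-insensitive
            found |= split_directives(value)
    return bool(found & NOINDEX_VALUES)
```

Usage: `is_noindex('<meta name="robots" content="noindex, follow">', {})` flags the page, and so does `is_noindex(html, {"X-Robots-Tag": "googlebot: noindex"})`.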

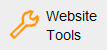

3. Revise your robots.txt file (or create it from scratch)

Now, if you need to create a robots.txt file or fix its instructions, switch to the Pages module and open the robots.txt tool from the module's toolbar. In the menu that pops up, you can either fetch your robots.txt from the server to revise it, or create a robots.txt file from scratch and upload it to your website.

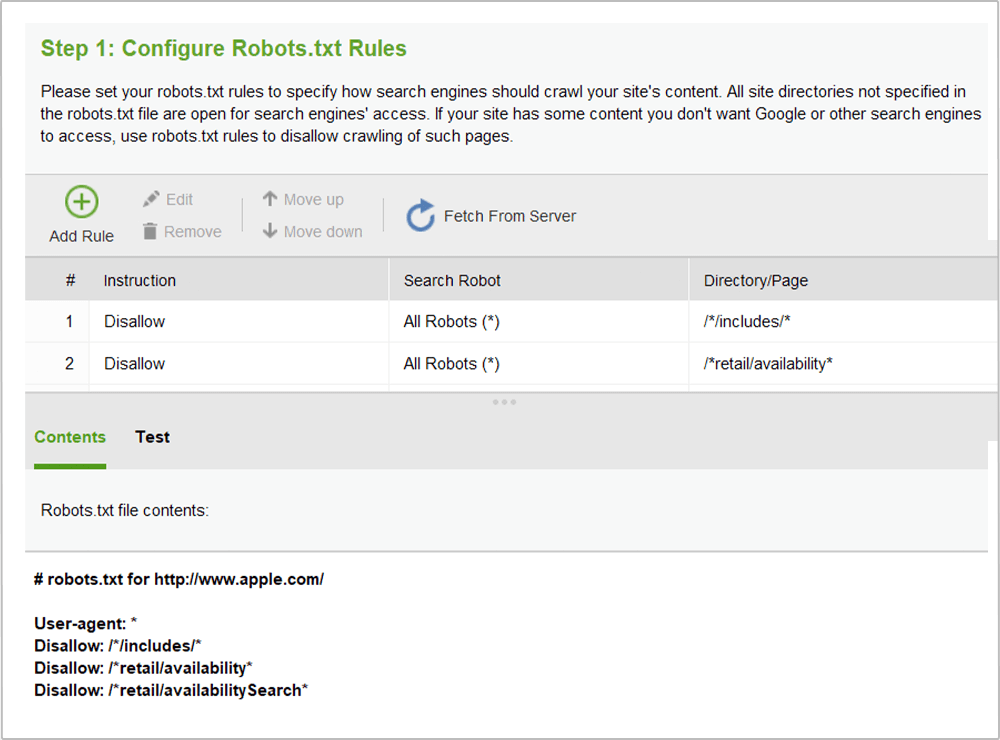

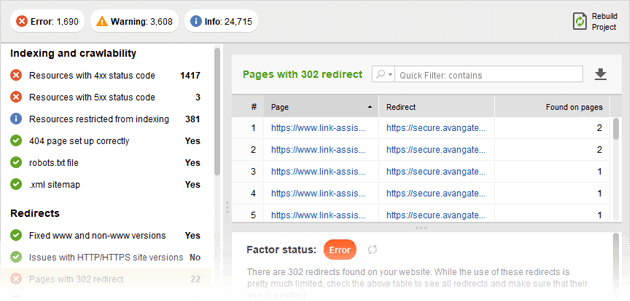
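If you build the file by hand, a small generator keeps its syntax consistent. Here is a minimal Python sketch under the following assumptions: the function name `build_robots_txt`, the rules, and the sitemap URL are all hypothetical, and only `User-agent`, `Disallow`, and `Sitemap` lines are produced. It then checks the result with the standard library's `urllib.robotparser` before you upload anything.

```python
from typing import Dict, List, Optional
from urllib.robotparser import RobotFileParser


def build_robots_txt(rules: Dict[str, List[str]], sitemap_url: Optional[str] = None) -> str:
    """Build robots.txt text from {user_agent: [disallowed path prefixes]}."""
    groups = []
    for agent, disallowed in rules.items():
        lines = [f"User-agent: {agent}"]
        # An empty "Disallow:" line means the group may crawl everything.
        lines += [f"Disallow: {path}" for path in disallowed] or ["Disallow:"]
        groups.append("\n".join(lines))
    text = "\n\n".join(groups)
    if sitemap_url:
        text += f"\n\nSitemap: {sitemap_url}"
    return text + "\n"


if __name__ == "__main__":
    # Hypothetical rules: keep cart and internal search pages out of the crawl.
    robots = build_robots_txt({"*": ["/cart/", "/search"]}, "https://example.com/sitemap.xml")
    print(robots)

    # Sanity-check the result with the standard-library parser.
    checker = RobotFileParser()
    checker.parse(robots.splitlines())
    print(checker.can_fetch("AnyBot", "https://example.com/cart/item"))  # False
    print(checker.can_fetch("AnyBot", "https://example.com/blog/post"))  # True
```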

4. Take care of your pages' response codes. HTTP response code errors can also cause indexing issues. Under Indexing and crawlability in the Site Audit module, go through Resources with 4xx status code, Resources with 5xx status code, and 404 page set up correctly. If any of these factors has an error or warning status, study the Details section to see the problem pages and get how-tos on fixing them.

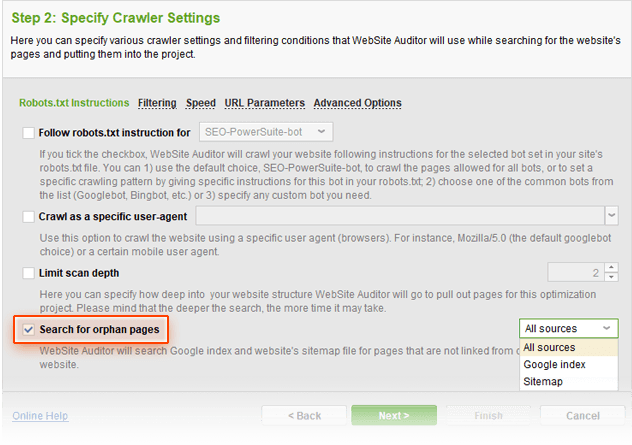
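You can approximate the same checks on your own crawl data. This Python sketch assumes you already have a hypothetical `{url: status_code}` mapping. It groups the URLs that returned 4xx and 5xx codes. It also reads "404 page set up correctly" as "a request for a URL that doesn't exist returns 404 or 410 rather than 200". That reading is an assumption about what the factor checks.

```python
from http import HTTPStatus
from typing import Dict, List


def problem_urls(crawl: Dict[str, int]) -> Dict[str, List[str]]:
    """Group crawled URLs ({url: status code}) that returned 4xx or 5xx."""
    problems: Dict[str, List[str]] = {"4xx": [], "5xx": []}
    for url, code in crawl.items():
        if 400 <= code < 500:
            problems["4xx"].append(url)
        elif 500 <= code < 600:
            problems["5xx"].append(url)
    return problems


def check_404_setup(status_for_missing_url: int) -> str:
    """Judge the status code that a deliberately non-existent URL returned."""
    if status_for_missing_url in (HTTPStatus.NOT_FOUND, HTTPStatus.GONE):
        return "ok"
    if status_for_missing_url == HTTPStatus.OK:
        # The server reports "found" for a page that doesn't exist: a soft 404.
        return "soft 404: missing pages return 200 OK"
    return f"unexpected status {status_for_missing_url} for a missing page"
```

Usage: `problem_urls({"/": 200, "/old": 404, "/api": 503})` returns `{"4xx": ["/old"], "5xx": ["/api"]}`.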

Tip: Look for orphan pages (pages that are not linked to internally). WebSite Auditor can help you find the pages on your site that no other page links to, which makes them hard for both users and search engines to find.

To find orphan pages on your site, you'll need to rebuild your WebSite Auditor project. To do this, go to the Pages module and click the button that rebuilds the project.

At Step 1 of the rebuild, check the Enable expert options box. At Step 2, select Look for orphan pages, and proceed with the next steps like normal. Once the crawl is complete, you'll be able to find orphan pages in the Pages module, marked with the Orphan page tag.
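A crawler that only follows links can never reach an orphan page, so you need a second source of URLs to compare against. Here is a minimal Python sketch of the comparison. It assumes that the list of known pages comes from outside the crawl (hypothetically, an XML sitemap) and that `links` holds the internal links the crawl collected. How WebSite Auditor itself discovers these pages is not described here.

```python
from typing import Dict, Iterable, List


def find_orphans(known_pages: Iterable[str], links: Dict[str, List[str]], homepage: str) -> List[str]:
    """Return known pages that no other page links to.

    known_pages: URLs found outside the link graph (hypothetically, an XML sitemap).
    links: {page: [internal URLs it links to]} collected by the crawl.
    """
    linked = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself doesn't make it findable
    }
    # The homepage is the entry point, so it is never treated as an orphan.
    return sorted(set(known_pages) - linked - {homepage})
```

Usage: with a sitemap listing `/`, `/about`, `/blog`, and `/old-promo`, where only the homepage links to `/about` and `/blog`, the function returns `["/old-promo"]`.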

Step 3: Visualize your site structure

Unnecessary redirects and broken links can be a warning sign to search engines; orphan pages and isolated parts of your site can hide good stuff from your visitors. In many cases, the most efficient way to instantly spot such problems in your site architecture is to visualize the site's structure.

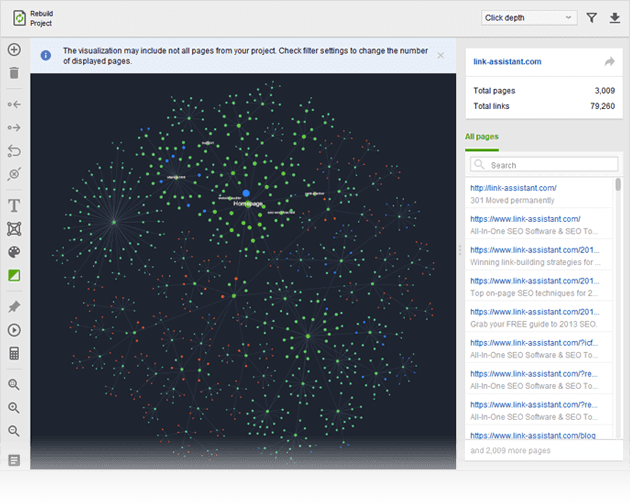

How-to: 1. Visualize the overall structure of your site. Go to Site Structure > Visualization. In your workspace, you will see a graphical map of your pages and the relations between them. Blue nodes are redirects, red nodes are broken links, and isolated nodes are orphan pages.

Tips:

1. By default, the tool shows 1,000 pages (which can be adjusted up to 10,000 pages) that are arranged by Click Depth. If you have a large website, it is better to visualize it part by part (e.g., main categories, a blog, etc.).

2. All connections between nodes are shown as arrows (either one-way or two-way) that represent the exact relations between pages. You can also drag any node anywhere on the graph (to get a clearer picture), as well as zoom in and out.

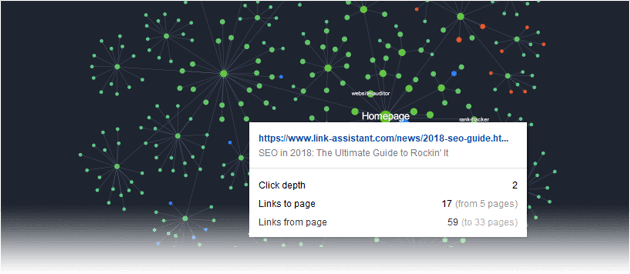

3. You can hover over any node to reveal additional information:

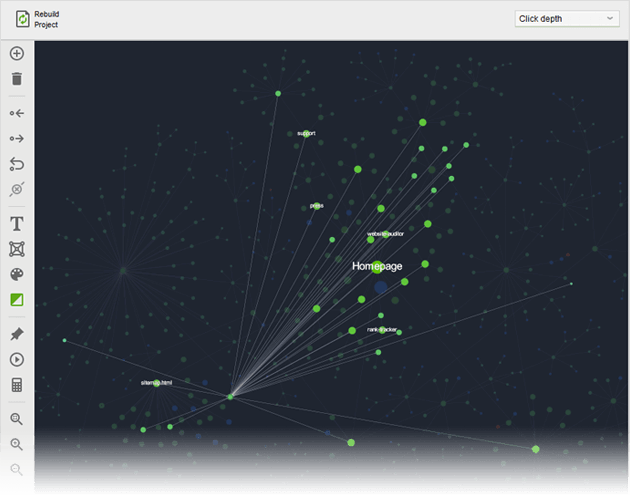
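Tip 1 above says the graph arranges pages by Click Depth. If you take click depth to be the fewest clicks needed to reach a page from the homepage, a breadth-first search over internal links computes it. Here is a minimal Python sketch under that assumption. The `links` mapping is hypothetical crawl output, and WebSite Auditor's exact definition may differ.

```python
from collections import deque
from typing import Dict, List


def click_depth(links: Dict[str, List[str]], homepage: str) -> Dict[str, int]:
    """Breadth-first search: fewest clicks needed to reach each page from the homepage."""
    depth = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # the first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth  # pages missing from the result are unreachable by links
```

Pages that end up deep in the result (or don't appear in it at all) are good candidates for extra internal links from higher-level pages.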

2. Click on any node to reveal only its connections:

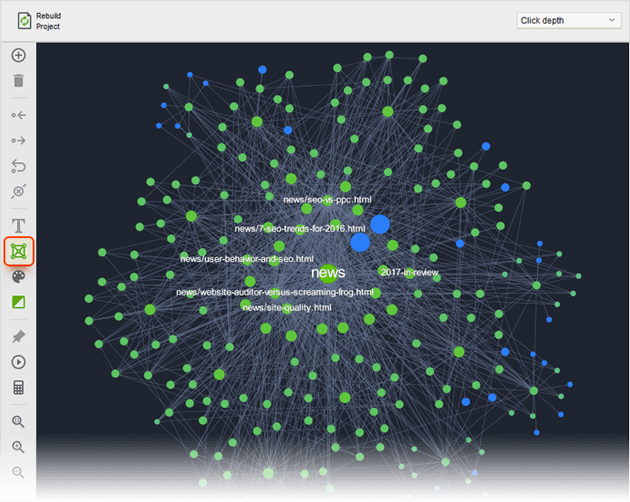

Or click on the Display all pages' connections button to see all the connections, not only the shortest ones:

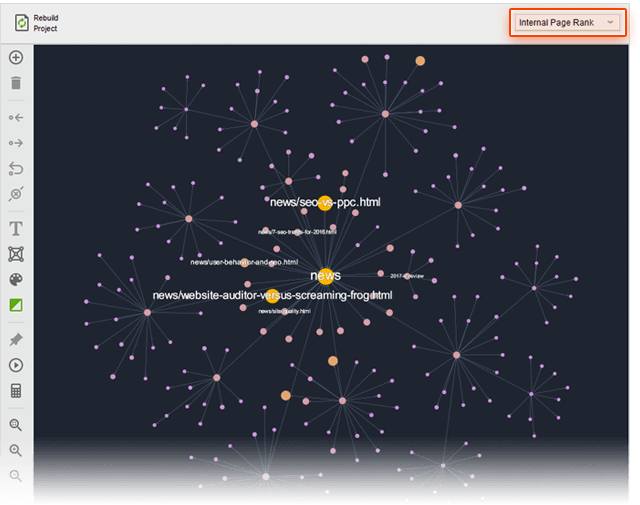

3. Visualize your site structure by Internal Page Rank. Choose Internal Page Rank from the drop-down menu at the top right. Here, the size of each node reflects its Internal Page Rank, i.e. the importance and authority of a page within your site. The bigger the node, the higher the value.

By looking at this graph, you can tell whether your main pages have the level of internal authority they are supposed to have: the homepage should have the highest Page Rank, main categories should have higher values than their subcategories, and so on.

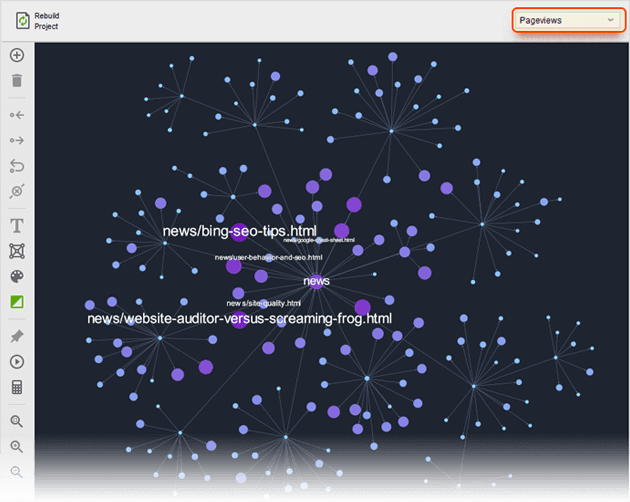
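The exact formula WebSite Auditor uses for Internal Page Rank isn't given here. This Python sketch swaps in the classic PageRank power iteration, run over internal links only, with an assumed damping factor of 0.85. The scale of its values may differ from the tool's. The sketch shows how rank flows along links, which explains why pages that many important pages link to get bigger nodes.

```python
from typing import Dict, List


def internal_pagerank(links: Dict[str, List[str]], damping: float = 0.85,
                      iterations: int = 50) -> Dict[str, float]:
    """Classic PageRank power iteration over internal links only."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page first gets the "random jump" share.
        new_rank = {p: (1 - damping) / n for p in pages}
        for page in pages:
            # Ignore duplicate links and self-links.
            targets = set(links.get(page, [])) - {page}
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page (no outgoing links): spread its rank over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank
```

On a tiny site where the homepage links to two pages and both link back, the homepage ends up with the largest value, matching the "homepage should rank highest" expectation above.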

4. Visualize your site structure by Pageviews. Connect your Google Analytics account. You can do it in Preferences > Google Analytics Account. Update your data in Site Structure > Pages and then in the Visualization dashboard, rebuild your Pageviews graph. Here the size of nodes reflects the value of Pageviews. The bigger the node, the higher the value.

By looking at this graph, you can see the traffic flow to certain pages. Find your conversion pages on the graph and check whether your main traffic pages point to them.

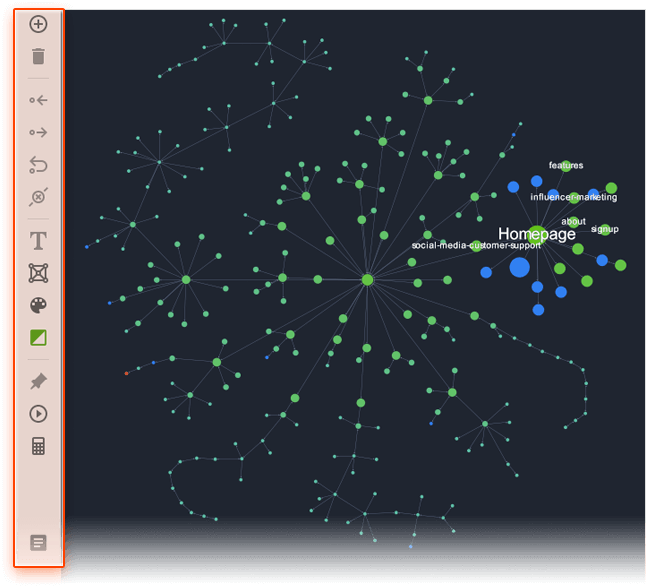

5. Using the tools on the left panel, you can edit your graph to improve your site structure. Click on any node to enter the editing mode. Use the action buttons on the left to add, remove, and create different kinds of links. Customize your graph by marking and pinning nodes and coloring the graph by tags.

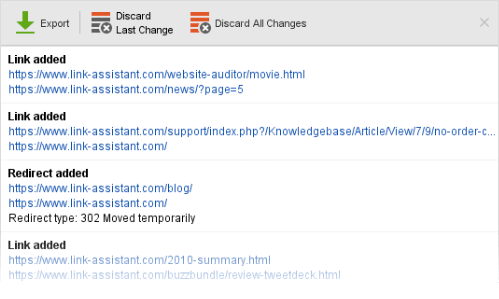

When all changes are done, you can click the button at the bottom of your action panel to export them as a to-do list, or export a final list of changes in CSV.

Plus, you can save a graphical map of your site in PDF.

Step 4: Fix redirects

Redirects are crucial for getting visitors to the right page if it has moved to a different URL, but if implemented poorly, redirects can become an SEO problem.

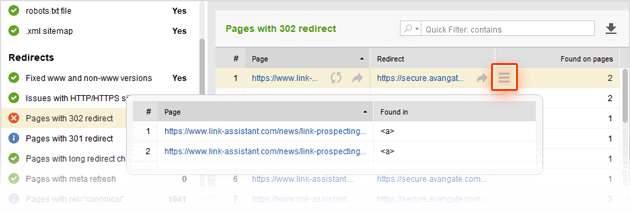

How-to: 1. Check pages with 302 redirects and meta refresh. Under Redirects in Site Audit, see if you have any 302 redirects or pages with meta refresh.

A 302 is a temporary redirect. Though it's a legitimate way to redirect pages in certain situations, it may not transfer link juice from the redirected URL to the destination URL.

A meta refresh is often used by spammers to redirect visitors to pages with unrelated content, and search engines generally frown upon meta refresh redirects.

Neither method is recommended for permanent moves, and both can prevent the destination page from ranking well in search engines. So unless the redirect really is temporary, set up permanent 301 redirects instead.

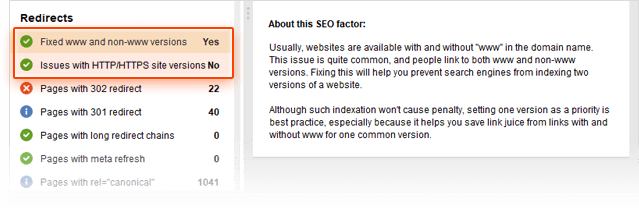
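To spot these cases in your own data, you need two checks: one that classifies status codes and one that detects `<meta http-equiv="refresh">` tags. Here is a minimal Python sketch using only the standard library. The grouping of 303 and 307 with 302 as "temporary", and of 308 with 301 as "permanent", follows the HTTP status code definitions. The function names are hypothetical.

```python
from html.parser import HTMLParser
from typing import Optional

PERMANENT = {301, 308}  # 308 is the method-preserving counterpart of 301
TEMPORARY = {302, 303, 307}


def redirect_kind(status: int) -> str:
    """Classify an HTTP status code as a permanent or temporary redirect."""
    if status in PERMANENT:
        return "permanent"
    if status in TEMPORARY:
        return "temporary"
    return "not a redirect"


class MetaRefreshFinder(HTMLParser):
    """Remembers the content of the first <meta http-equiv="refresh"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.refresh: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        is_refresh = tag == "meta" and attr.get("http-equiv", "").lower() == "refresh"
        if is_refresh and self.refresh is None:
            self.refresh = attr.get("content", "")


def find_meta_refresh(html: str) -> Optional[str]:
    """Return the meta refresh content (e.g. '0; url=...'), or None if absent."""
    finder = MetaRefreshFinder()
    finder.feed(html)
    return finder.refresh
```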

2. Make sure the HTTP/HTTPS and www/non-www versions of your site are redirecting correctly. If your site is available both with the www part in its URL and without it (and it should be), or if you have both an HTTP and an HTTPS version of the site, it's important that these redirect correctly to the primary version.

To make sure HTTP/HTTPS and www/non-www versions of your site are set up correctly, take a look at those factors in the Site Audit module, under the Redirects section. If any problems are found, you'll get detailed how-to-fix advice in the right-hand part of your screen.

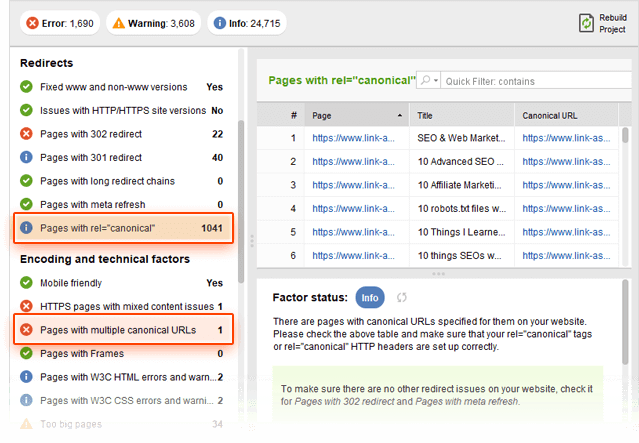
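The rule behind these checks is that every variant of a URL should redirect in one hop to the same URL on the primary scheme and host, with the path and query kept intact. Here is a minimal Python sketch of that rule. The function name and the primary version `https://www.example.com` are assumptions, so substitute your own.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def strip_www(host: str) -> str:
    """Drop a leading 'www.' so www and non-www hosts can be compared."""
    return host[4:] if host.startswith("www.") else host


def expected_redirect(url: str, primary_scheme: str, primary_host: str) -> Optional[str]:
    """Return the URL that `url` should 301 to, or None if no redirect is needed."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if strip_www(host) != strip_www(primary_host):
        return None  # a different site altogether
    if parts.scheme == primary_scheme and host == primary_host:
        return None  # already the primary version
    # Keep path and query so deep links land on the same page, not the homepage.
    return urlunsplit((primary_scheme, primary_host, parts.path or "/", parts.query, ""))
```

Usage: `expected_redirect("http://example.com/shop?id=1", "https", "www.example.com")` gives `"https://www.example.com/shop?id=1"`. Compare that with the `Location` header your server actually sends.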

3. Check for issues with rel="canonical". Canonicalization is the process of picking the best URL when there are several pages on your site with identical or similar content. It's a good idea to specify canonical URLs for pages with duplicate content, so that search engines know which of the pages is more authoritative and should be ranked in search results. You can specify canonical URLs either in a <link rel="canonical"> element in the page's HTML or in a canonical link in your HTTP header.

To see which pages of your site have a canonical URL set up, and what that URL is, click on Pages with rel="canonical" under the Redirects section of your Site Audit dashboard. On the right, you'll see the pages' titles and canonical URLs.

Some content management systems automatically add a canonical tag to the site's pages, which can leave a single page with more than one canonical URL. Multiple canonical URLs will confuse search engines and are likely to make them ignore all of the page's canonical hints. To check for such instances on your site, click on Pages with multiple canonical URLs under the Encoding and technical factors section of your site audit.
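A page's canonical tags can also be read directly from its HTML. This Python sketch collects every `<link rel="canonical">` href and flags pages that declare more than one distinct URL. It only looks at HTML tags, not at the HTTP header variant, and its function names are hypothetical.

```python
from html.parser import HTMLParser
from typing import List


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: List[str] = []

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        # rel can hold several space-separated values.
        if tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonicals.append(attr.get("href", ""))


def canonical_urls(html: str) -> List[str]:
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


def has_conflicting_canonicals(html: str) -> bool:
    """True when the page declares more than one distinct canonical URL."""
    return len(set(canonical_urls(html))) > 1
```

The sketch treats two identical canonical tags as harmless and flags only conflicting ones. You may prefer to flag any repeat as well.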

Step 5: Brush up the code

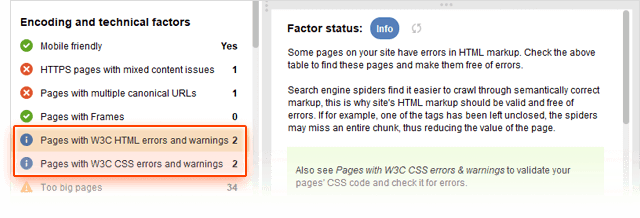

Coding issues can be an SEO and user experience disaster, affecting your pages' load speed, the way they are displayed in different browsers, and their crawlability for search engine bots. So the next step of your site audit is to make sure your pages' code is free from errors, is perfectly readable to search engines (not hiding your content in frames) and is not too "heavy", which would skyrocket your page load time.

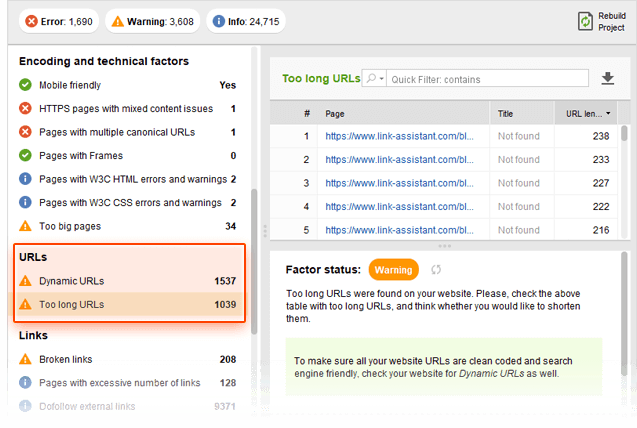

How-to: Make sure no pages use Frames, contain W3C errors and warnings, or are too big. You'll find this data under Encoding and technical factors in Site Audit.

For any factors with an Info, Warning, or Error status, go to Details to explore the problem pages and see recommendations on how to get the issue fixed.

Tips: 1. See if your site is mobile-friendly. The Mobile-friendly factor shows whether or not the site's homepage passes Google's mobile-friendliness test. Mind that failing that test can cost you positions in mobile search, and that may mean a sharp decrease in traffic.

2. Look out for unreadable URLs. Check the URLs section in the Site Audit module and fix URLs that are too long and not user-friendly. As for dynamic URLs, only use them when necessary: dynamically generated URLs are hard to read and not descriptive. Though unlikely, several versions of the same URL with different parameters might also cause duplication issues if search engines find them.
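Both URL checks are easy to sketch in Python. In this sketch, the 115-character limit is an assumed threshold for illustration, not necessarily the one WebSite Auditor uses, and "dynamic" is taken to mean "has query parameters". The `normalized` helper shows one hypothetical way to catch parameter-order duplicates: it sorts the parameters so that two orderings compare equal.

```python
from typing import List
from urllib.parse import parse_qs, parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed threshold for illustration only.
MAX_URL_LENGTH = 115


def url_issues(url: str, max_length: int = MAX_URL_LENGTH) -> List[str]:
    """List readability problems: excessive length and query parameters."""
    issues = []
    if len(url) > max_length:
        issues.append(f"too long ({len(url)} chars)")
    params = parse_qs(urlsplit(url).query)
    if params:
        # Query parameters are what usually makes a URL "dynamic".
        issues.append("dynamic: " + ", ".join(sorted(params)))
    return issues


def normalized(url: str) -> str:
    """Sort query parameters so '?b=2&a=1' and '?a=1&b=2' compare equal."""
    parts = urlsplit(url)
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, query, ""))
```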

Step 6: Check for linking problems

Internal linking problems not only hurt your visitors' user experience, but also confuse search engines as they crawl your site. You should also pay attention to outgoing external links, as search engines may consider pages with too many links spammy.

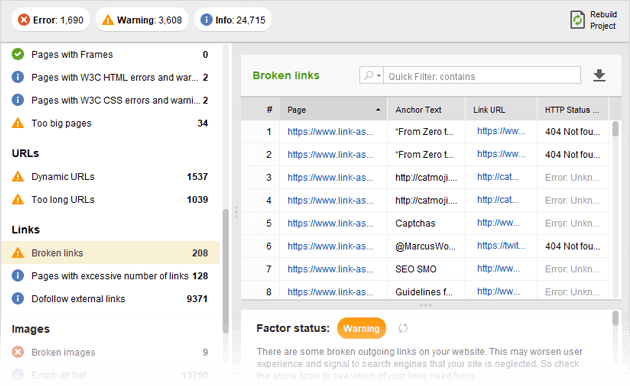

How-to: 1. Find all broken links. Broken links are links that point to non-existent URLs: these can be old pages that are no longer there or simply misspelled versions of your URLs. It is critical that you check your site for broken links so that search engines and visitors never hit a dead end while navigating through your site.

To get a list of all broken links on the website you're auditing, click on Broken links under the Links section (still in the Site Audit module). Here, you'll see the pages where broken links are found (if any), the URL of the broken link, and its anchor text.

2. Find pages with too many outgoing links. Too many links coming from a single page can be overwhelming to visitors and a spam signal for search engines. As a rule of thumb, you should try to keep the number of links on any page under 100.

To get a list of pages with too many outgoing links, click on Pages with excessive number of links under the Links section in your site audit. Here, you'll see the pages that have over 100 outgoing links (both internal and external).

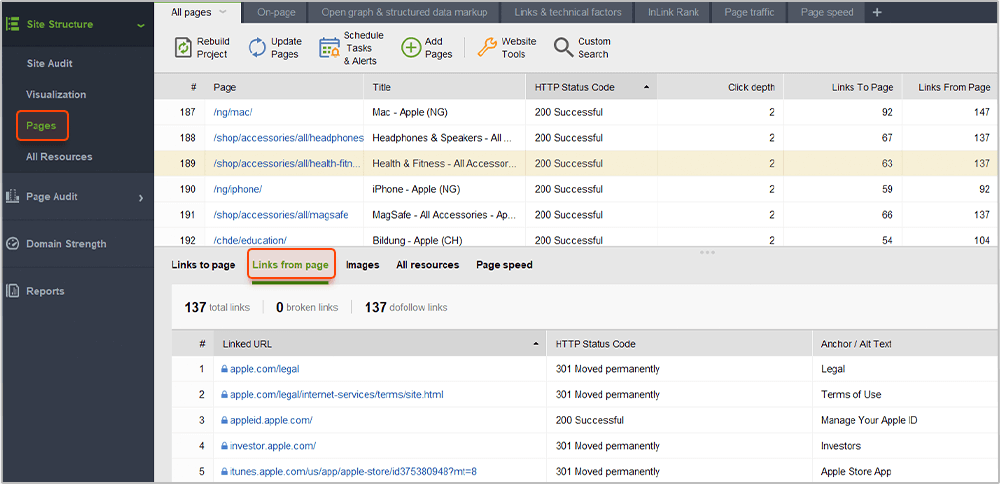
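Counting links per page is straightforward once you have the HTML. Here is a minimal Python sketch that applies the 100-link rule of thumb from the text above. It counts every `<a href>` on the page, internal and external alike. The input mapping of URLs to HTML and the function names are hypothetical.

```python
from html.parser import HTMLParser
from typing import Dict, List

LINK_LIMIT = 100  # the rule of thumb from the text above


class LinkCollector(HTMLParser):
    """Collects the href of every <a> element on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def pages_with_too_many_links(pages: Dict[str, str], limit: int = LINK_LIMIT) -> Dict[str, int]:
    """Map each page URL whose HTML has more than `limit` links to its link count."""
    flagged = {}
    for url, html in pages.items():
        collector = LinkCollector()
        collector.feed(html)
        if len(collector.hrefs) > limit:
            flagged[url] = len(collector.hrefs)
    return flagged
```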

3. Identify links that are being redirected. Redirected links can pose a problem, as they typically make the destination page take longer to load and waste your crawl budget on URLs that return a redirect status code. Look out for these links and fix them by changing them so that they point to the destination page right away.

In the Site Audit module, look for Pages with 302 redirect and Pages with 301 redirect under the Redirects section. If any such pages are found, you'll see a list of them on the right, along with the URL they redirect to and the number of internal links pointing to them. Click on the three-line button next to the number of links to the page for a full list of pages that link to it.
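A redirected link might pass through several hops before it reaches its destination. The fix is to point the link at the final URL, not the next hop. Here is a minimal Python sketch of that idea. It assumes you have a hypothetical `{from_url: to_url}` redirect map and a `{page: [linked URLs]}` map from a crawl. It raises an error on redirect loops instead of looping forever.

```python
from typing import Dict, List, Tuple


def resolve(url: str, redirects: Dict[str, str], max_hops: int = 10) -> str:
    """Follow a redirect map ({from_url: to_url}) to the final destination."""
    chain: List[str] = []
    while url in redirects:
        if url in chain or len(chain) >= max_hops:
            raise ValueError("redirect loop or overly long chain: " + " -> ".join(chain + [url]))
        chain.append(url)
        url = redirects[url]
    return url


def link_fixes(links: Dict[str, List[str]], redirects: Dict[str, str]) -> List[Tuple[str, str, str]]:
    """List (page, redirected link, final URL) so each link can point straight to its target."""
    fixes = []
    for page, targets in links.items():
        for target in targets:
            if target in redirects:
                fixes.append((page, target, resolve(target, redirects)))
    return fixes
```

Usage: with `/old` redirecting to `/newer` and `/newer` redirecting to `/final`, every link to either URL is reported with `/final` as the suggested replacement.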

Tip: Get details on any internal/external link. For detailed info on any link (anchor/alt text, directives, etc.), switch to the Pages module in WebSite Auditor and click on one of the pages in the table. Below, click on Links from page to see every link on the page along with its HTTP response code, anchor text, and robots directives (nofollow/dofollow).

Step 7: Audit your images

Issues with images on your site can not only negatively affect your visitors' user experience, but also confuse search engines as they crawl your site. Search engines can't read the content in an image, so it's critical that you provide them with a brief description of what the image is about in the alternative text.

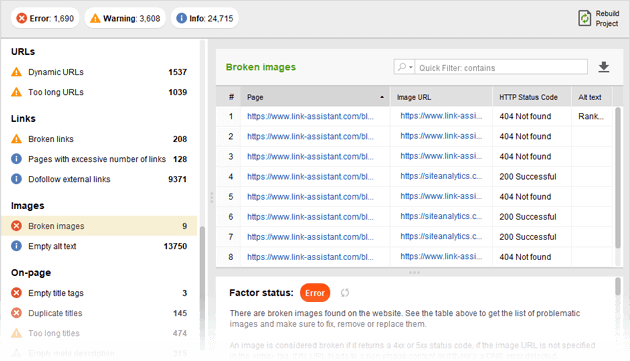

1. Find all broken images. Broken images are images that cannot be displayed – this can happen when image files have been deleted or the path to the file has been misspelled.

To get a list of problematic images on your site, check the Broken images factor in the Images section (under the Site Audit module).

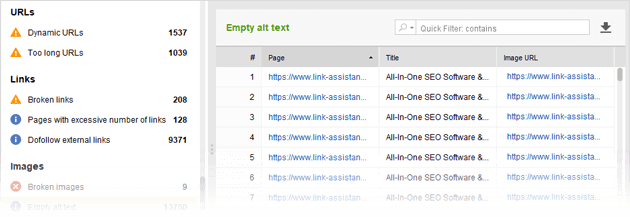

2. Spot images with empty alt text. When an image's alt text is missing, search engines won't be able to figure out what the image is about and how it contributes to the page's content. That's why it's important that you use unique alternative text for your images; it's also a good idea to optimize it for your target keywords as long as they fit naturally in the description.

To get a list of images with no alternative text, click on Empty alt text in the Images section (under the Site Audit module).
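The same check can be run on a single page's HTML. This Python sketch records every `<img>` whose `alt` attribute is missing or blank. The function name is hypothetical. Keep in mind that `alt=""` is a legitimate choice for purely decorative images, so review the results rather than filling them in blindly.

```python
from html.parser import HTMLParser
from typing import List


class ImageAltAudit(HTMLParser):
    """Records the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self) -> None:
        super().__init__()
        self.without_alt: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        alt = attr.get("alt")
        if alt is None or not alt.strip():
            self.without_alt.append(attr.get("src") or "")


def images_without_alt(html: str) -> List[str]:
    audit = ImageAltAudit()
    audit.feed(html)
    return audit.without_alt
```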

Step 8: Review your titles and meta descriptions

Through your title and meta description tags you can inform search engines what your pages are about. A relevant title and description can help your rankings; additionally, the contents of these tags will be used in your listing's snippet in the search results.

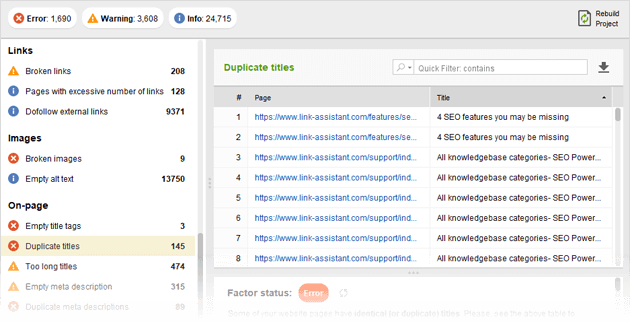

How-to: Avoid empty, too long, or duplicate titles and meta descriptions. Check the On-page section of the Site Audit module to see problem pages, if any, and get info and tips.

Duplicate titles and descriptions can confuse search engines as to which page should be ranked in search results; consequently, none of the duplicates may rank well. If your titles or meta descriptions are empty, search engines will generate a snippet for the page themselves, and more likely than not, it will not look appealing to searchers. Lastly, titles and descriptions that are too long will get truncated in your SERP snippet and may not get your message across.
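All three problems can be checked in one pass over your pages. In this Python sketch, the 60- and 160-character limits are assumptions for illustration. Search engines actually truncate snippets by pixel width, so treat character counts as a rough proxy. The input format, a hypothetical `{url: (title, description)}` mapping, is also an assumption.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Assumed character limits; search engines actually truncate by pixel width.
LIMITS = {"title": 60, "description": 160}


def audit_snippets(pages: Dict[str, Tuple[str, str]]) -> Dict[str, List[str]]:
    """pages maps URL -> (title, meta description); returns URL -> list of issues."""
    issues: Dict[str, List[str]] = defaultdict(list)
    seen: Dict[Tuple[str, str], List[str]] = defaultdict(list)  # (field, text) -> URLs
    for url, (title, description) in pages.items():
        for field, text in (("title", title), ("description", description)):
            text = (text or "").strip()
            if not text:
                issues[url].append(f"empty {field}")
                continue
            if len(text) > LIMITS[field]:
                issues[url].append(f"{field} too long ({len(text)} chars)")
            seen[(field, text.lower())].append(url)
    # Any text shared by two or more pages is a duplicate.
    for (field, _), urls in seen.items():
        if len(urls) > 1:
            for url in urls:
                issues[url].append(f"duplicate {field}")
    return dict(issues)
```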

Step 9: Check PageSpeed and user experience

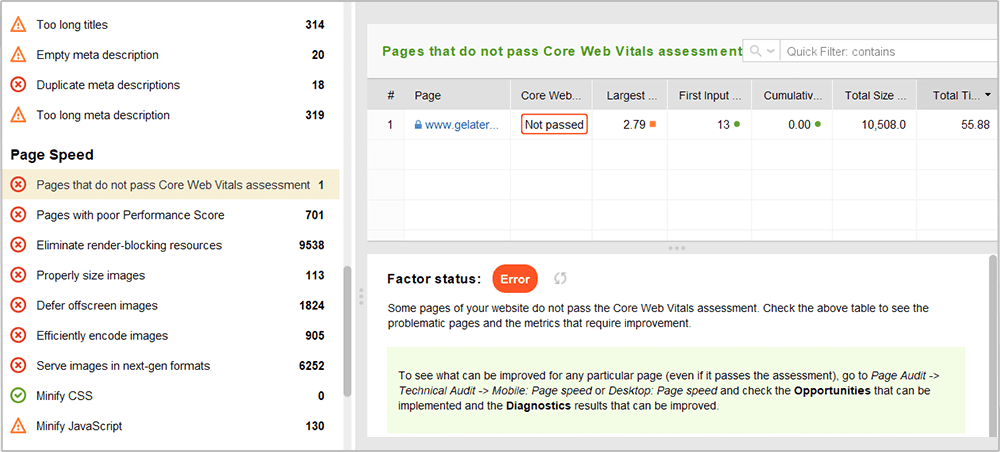

As Google has made Core Web Vitals a ranking factor, you have to make sure nothing prevents users and Google from accessing your pages and interacting with their content. A bad user experience (e.g. slow pages, excessive pop-ups, oversized images and/or videos) can hurt your pages' positions in search.

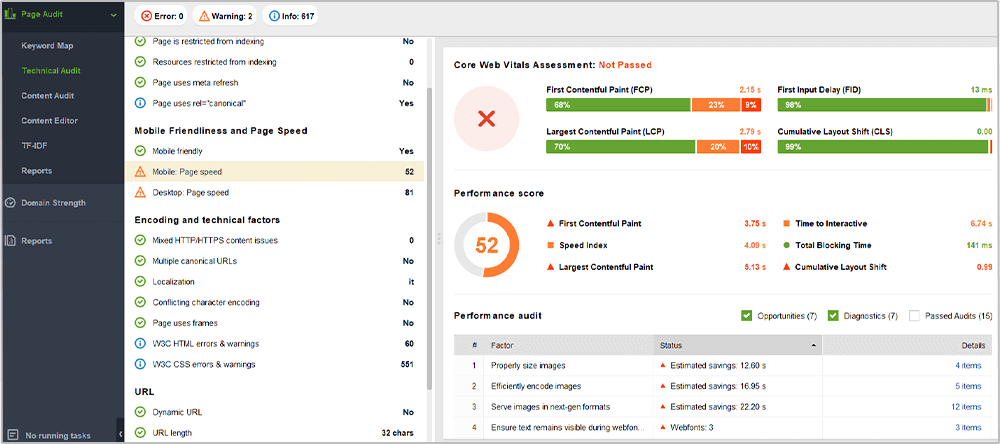

How-to: Check what pages don't pass Core Web Vitals assessment. Check the Page Speed > Pages that do not pass Core Web Vitals assessment section of the Site Audit module to see the pages that require improvements.

Tip: To get more details on the page's Core Web Vitals and see the hints on what to improve on an exact page, go to Page Audit > Technical Audit > Mobile Friendliness and Page Speed. Check both Mobile and Desktop sections to see how to make both versions' performance better.

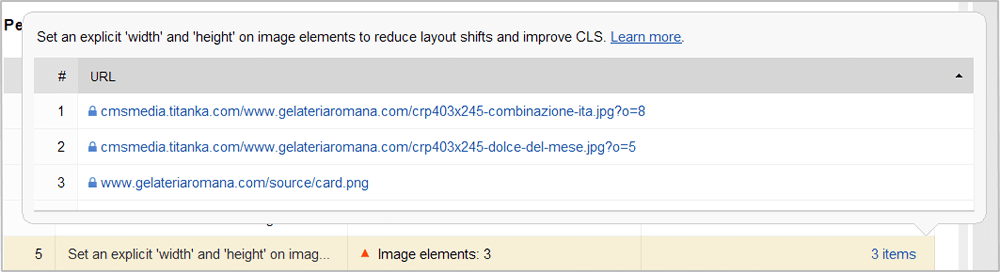

To see the exact page items that keep the page from reaching good Core Web Vitals scores, expand the list of items in the Details column of the Performance audit table.
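This section doesn't name the individual metrics. As of 2024, Google's Core Web Vitals are Largest Contentful Paint (LCP), Interaction to Next Paint (INP, which replaced FID in March 2024), and Cumulative Layout Shift (CLS). Google assesses each metric at the 75th percentile of real-user data. This Python sketch encodes Google's published "good" and "poor" thresholds. It assumes you already have 75th-percentile values, for example from field data, and the function names are hypothetical.

```python
from typing import Dict

# Google's published thresholds, applied to each metric's 75th-percentile value.
# LCP is in seconds, INP in milliseconds, and CLS is unitless.
GOOD = {"LCP": 2.5, "INP": 200, "CLS": 0.1}
POOR = {"LCP": 4.0, "INP": 500, "CLS": 0.25}


def rate(metric: str, p75_value: float) -> str:
    """Rate one metric as good, needs improvement, or poor."""
    if p75_value <= GOOD[metric]:
        return "good"
    if p75_value <= POOR[metric]:
        return "needs improvement"
    return "poor"


def passes_cwv(p75: Dict[str, float]) -> bool:
    """A page passes only if every metric is rated "good"."""
    return all(rate(metric, value) == "good" for metric, value in p75.items())
```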

You're done with the tech part!

Congrats — you've fixed all technical issues that may have been holding your rankings back (and that in itself puts you ahead of so many competitors already!). It's time you moved on to the (more) creative part, namely creating and optimizing your landing pages' content.

Create a winning SEO campaign with SEO PowerSuite:

- Find your target keywords

- Check current visibility in search engines

- Detect on-site issues and fix them

- Optimize your pages' content

- Get rid of harmful links

- Build new quality backlinks