Crawling Slower Sites

At times, some pages in your project may return status codes like '403 Forbidden', '400 Bad Request' or '500 Internal Server Error'. These pages may be listed in the 'Resources with 4xx or 5xx status codes' sections even though the URLs are valid and load fine in a browser.

This may happen with large or slow websites hosted on servers with strict security or crawl budget restrictions. To decrease the load and get valid results, you can adjust the crawling settings in WebSite Auditor to limit the number and the speed of the requests sent to a site.

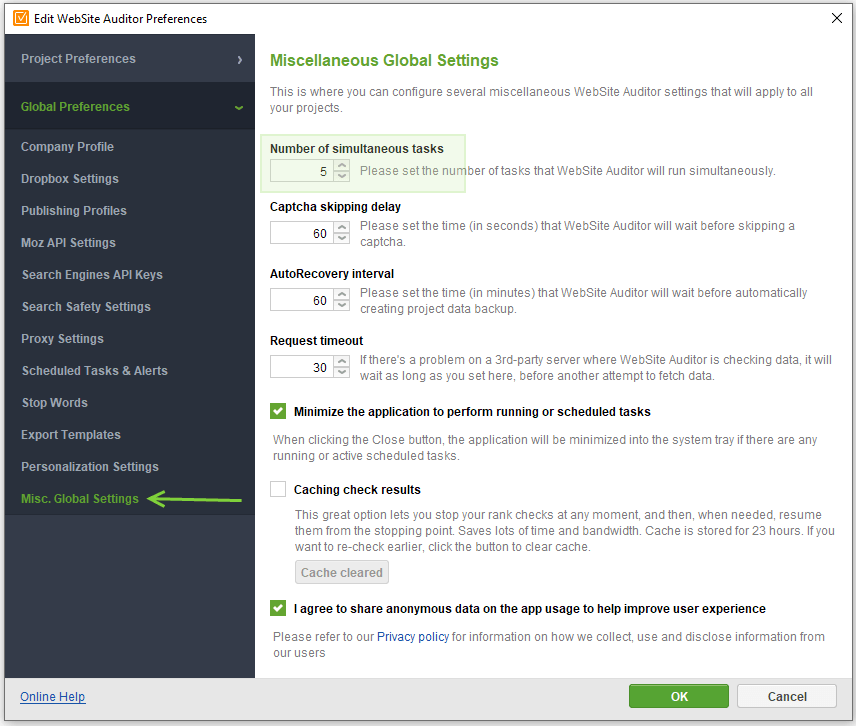

First, limit the number of tasks WebSite Auditor runs simultaneously. Go to Preferences > Misc Global Settings and set the Number of Tasks field to 1 or 2.

This reduces the load on the server, so your IP is less likely to get blocked and the pages should start returning their actual status codes.
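To illustrate why a lower task count eases the load, here is a minimal Python sketch of capping simultaneous requests. It is not WebSite Auditor's implementation: `run_with_task_limit`, `PeakCounter` and `fake_fetch` are hypothetical names, and the fetch is simulated rather than sent over the network.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_TASKS = 2  # mirrors the "1-2 tasks" suggestion; the name is illustrative


def run_with_task_limit(urls, fetch, max_tasks=MAX_TASKS):
    """Call fetch(url) for every URL with at most max_tasks calls in flight."""
    with ThreadPoolExecutor(max_workers=max_tasks) as pool:
        return list(pool.map(fetch, urls))


class PeakCounter:
    """Records the highest number of overlapping calls (demonstration only)."""

    def __init__(self):
        self._lock = threading.Lock()
        self.active = 0
        self.peak = 0

    def fake_fetch(self, url):
        with self._lock:
            self.active += 1
            self.peak = max(self.peak, self.active)
        time.sleep(0.01)  # stand-in for network latency
        with self._lock:
            self.active -= 1
        return 200  # pretend every page answers 200 OK


counter = PeakCounter()
urls = [f"https://example.com/page-{i}" for i in range(10)]
statuses = run_with_task_limit(urls, counter.fake_fetch)
```

With the cap set to 2, `counter.peak` never exceeds 2, so the simulated server never handles more than two requests at once.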

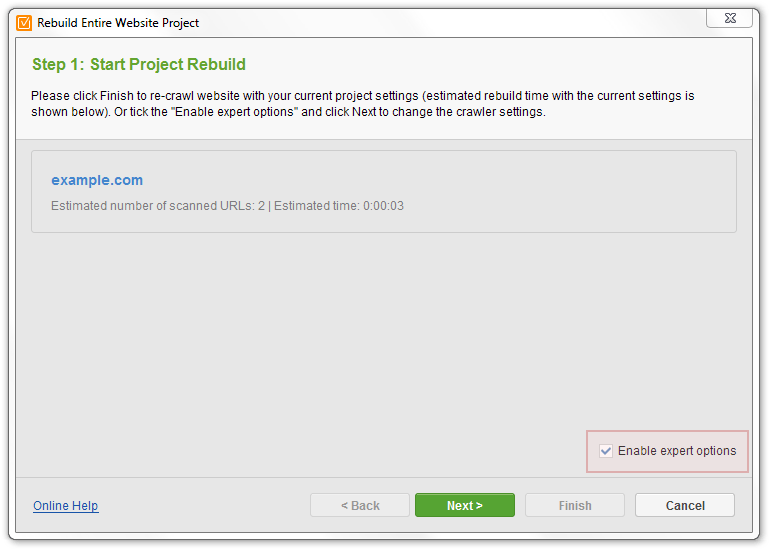

Next, when you create or rebuild a project, enable Expert Options and click Next.

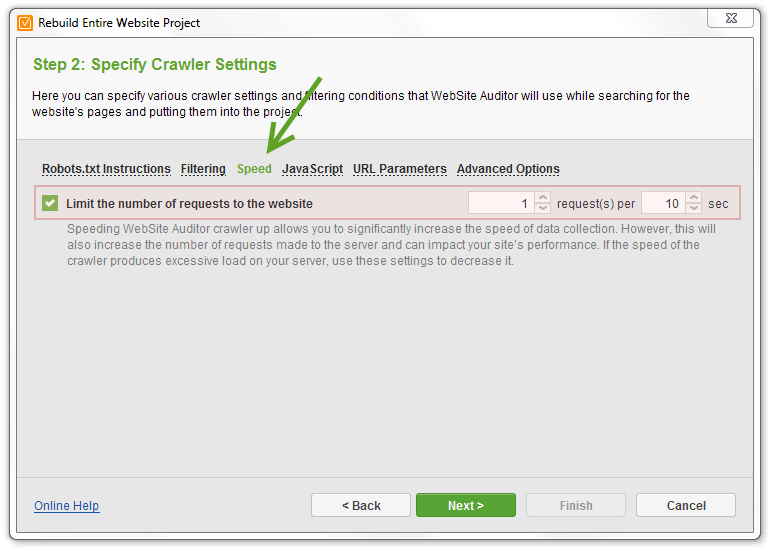

In the Speed section, limit the number of requests to the website to prevent heavy load on the server. We recommend setting the limit to 1 request per 10 seconds if the server tends to block requests.
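The effect of such a limit, and how long a crawl takes under it, can be sketched in Python. This is only an illustration under stated assumptions, not how WebSite Auditor works internally: `RequestThrottle` and `estimated_crawl_seconds` are hypothetical names, and the estimate ignores the time each page takes to download.

```python
import time


class RequestThrottle:
    """Spaces calls so at most one request starts per `interval` seconds."""

    def __init__(self, interval=10.0, clock=time.monotonic, sleep=time.sleep):
        self.interval = interval
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = None  # no request has been sent yet

    def wait(self):
        """Block until the next request is allowed, then reserve that slot."""
        now = self._clock()
        if self._next_allowed is not None and now < self._next_allowed:
            self._sleep(self._next_allowed - now)
            now = self._next_allowed
        self._next_allowed = now + self.interval


def estimated_crawl_seconds(page_count, interval=10.0):
    """Lower bound on crawl time: the first request is immediate, the rest wait."""
    return max(page_count - 1, 0) * interval
```

Calling `wait()` before each request enforces the spacing. At 1 request per 10 seconds, `estimated_crawl_seconds(1000)` gives 9990 seconds, or roughly 2.8 hours for a 1,000-page site, so expect throttled crawls of larger sites to run noticeably longer.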

These settings can be accessed or modified at any time under Preferences > Crawler Settings before rebuilding an existing project.