Crawler Settings

WebSite Auditor is equipped with a powerful SEO spider that crawls your site just like search engine bots do.

It not only looks at your site's HTML but also sees your CSS, JavaScript, Flash, images, videos, PDFs, and other resources, both internal and external, and shows you how all pages and resources interlink. This lets you see the whole picture and make decisions based on complete data.

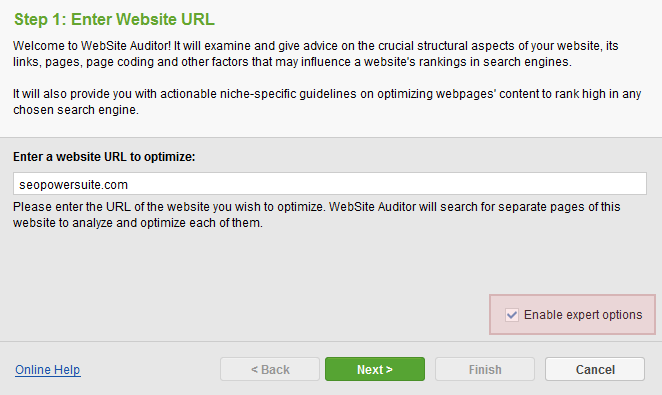

Crawler settings can be tailored to your needs and preferences: for instance, to collect or exclude certain sections of a site, crawl a site on behalf of any search engine bot, or find pages that are not linked from the rest of the site. To configure the settings, tick the Enable expert options box when creating a project.

In Step 2 you’ll be able to specify crawler settings for the current project.

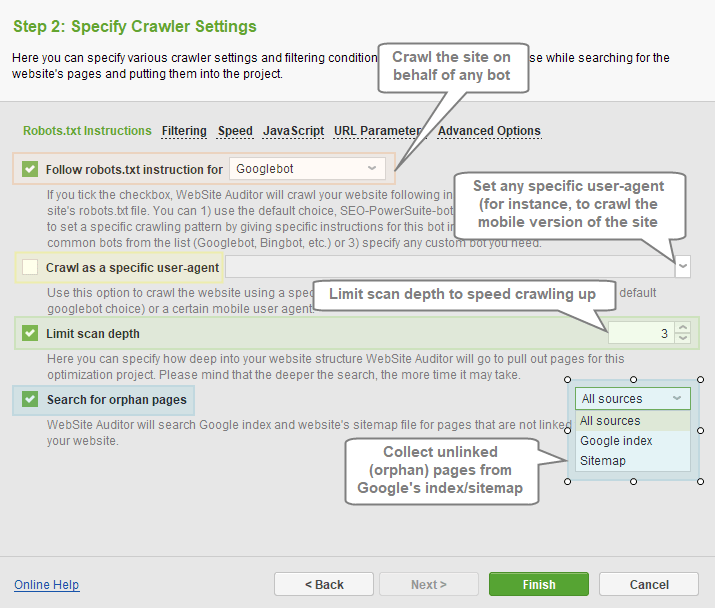

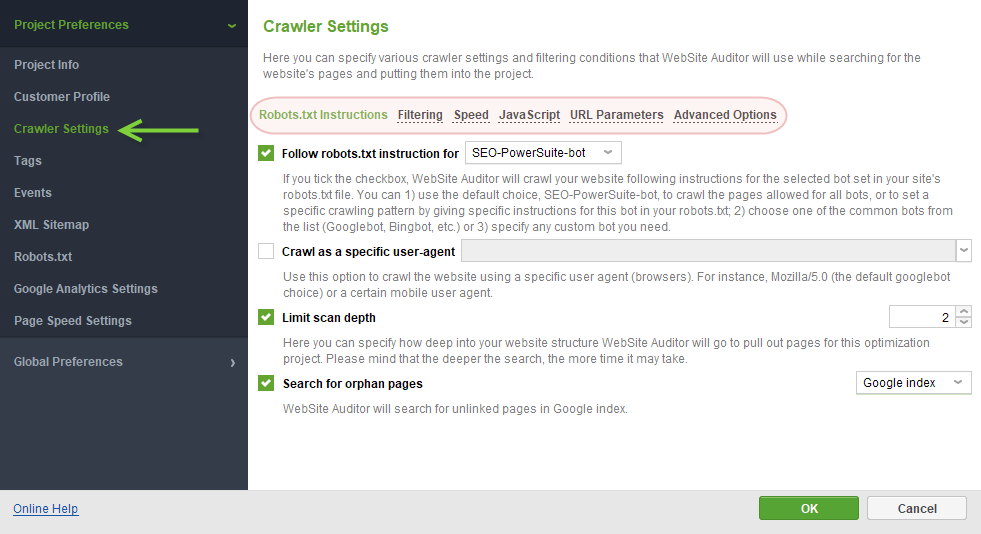

The Robots.txt Instructions section features the following settings:

- Follow robots.txt instructions: lets you crawl a site following robots instructions, either for all spiders or any specific bot of your choice (from the dropdown menu).

- Crawl as a specific user-agent: lets you crawl the site using a specific user agent (including mobile agents).

- Limit scan depth: controls how deep into your site’s structure the tool should go to pull the pages for the project. The deeper the search is, the more time the crawl may take.

- Search for orphan pages: collects pages that are not linked from anywhere on the site but are present in Google’s index, in your Sitemap, or both. Orphan pages will receive corresponding tags in the project, showing the source they were found in.
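As a rough illustration of how these settings interact, the sketch below crawls a small in-memory site. It is not WebSite Auditor's implementation: the `LINKS` graph, `SITEMAP` set, `ROBOTS_TXT` text, and the `crawl` and `find_orphans` helpers are all hypothetical, and counting depth as the number of clicks from the start page is an assumption. Only `urllib.robotparser` and `collections.deque` come from the Python standard library.

```python
from collections import deque
from urllib.robotparser import RobotFileParser

# Hypothetical site used for illustration: page path -> paths it links to.
LINKS = {
    "/": ["/blog/", "/private/"],
    "/blog/": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-1/comments"],
    "/blog/post-1/comments": [],
    "/private/": [],
}
# Hypothetical sitemap; "/old-landing-page" is not linked from any page.
SITEMAP = {"/", "/blog/", "/old-landing-page"}
# Hypothetical robots.txt; a real crawler would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def crawl(links, robots_txt, user_agent="*", max_depth=None, start="/"):
    """Breadth-first crawl returning {path: click depth from start}."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        if max_depth is not None and depths[page] >= max_depth:
            continue  # depth limit reached: do not follow this page's links
        for target in links.get(page, []):
            if target in depths or not robots.can_fetch(user_agent, target):
                continue  # already seen, or disallowed for this bot
            depths[target] = depths[page] + 1
            queue.append(target)
    return depths


def find_orphans(crawled, sitemap):
    """Sitemap entries that the link-following crawl never reached."""
    return sorted(set(sitemap) - set(crawled))


if __name__ == "__main__":
    pages = crawl(LINKS, ROBOTS_TXT, max_depth=2)
    print(pages)
    print(find_orphans(crawl(LINKS, ROBOTS_TXT), SITEMAP))
```

A lower `max_depth` shortens the crawl at the cost of missing deeper pages, which mirrors the trade-off described for Limit scan depth.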

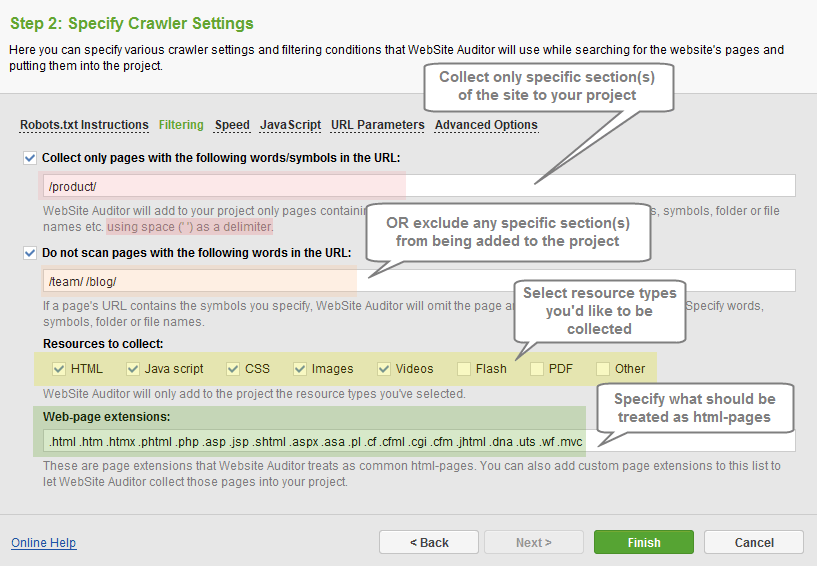

In the Filtering section you can specify various filtering conditions WebSite Auditor will use while collecting pages and resources to your project.

- Collect only pages with the following words/symbols in the URL: only the pages containing specific words/symbols in URLs will be added to your project. Separate symbols, words, and file or category names with a single space (‘ ‘).

- Do not scan pages with the following words in the URL: pages containing specific words/symbols in URLs will be excluded from your project. Please note the program will have to crawl the site in full, as the pages to be excluded may contain links to pages that don’t match the conditions; unnecessary pages simply won’t be loaded into the project once the crawl is complete.

- Resources to collect: check or uncheck the types of resources to be collected to the project.

- Web-page extension: specify which file extensions should be treated as regular HTML pages. Add custom extensions to let the tool collect those pages into the project.
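The two URL filters above can be pictured with a minimal sketch. The helper names `parse_terms` and `keep_url` are hypothetical, and the matching rule (case-sensitive substring match, where any one include term is enough) is an assumption rather than documented WebSite Auditor behavior.

```python
def parse_terms(field):
    """Split a space-delimited filter field into individual terms."""
    return field.split()


def keep_url(url, include_terms=(), exclude_terms=()):
    """Decide whether a crawled URL should be loaded into the project.

    Assumption: a URL passes the include filter if it contains at least one
    include term, and is dropped if it contains any exclude term.
    """
    if include_terms and not any(term in url for term in include_terms):
        return False
    return not any(term in url for term in exclude_terms)


if __name__ == "__main__":
    crawled = [
        "https://example.com/blog/post-1",
        "https://example.com/blog/tag/seo",
        "https://example.com/shop/item-7",
    ]
    include = parse_terms("/blog/ .pdf")
    exclude = parse_terms("/tag/")
    # Filtering happens after the crawl, so excluded pages are still visited.
    print([u for u in crawled if keep_url(u, include, exclude)])
```

Applying the filter to the finished list of crawled URLs reflects the note above: excluded pages still have to be visited, because they may link to pages that should be kept.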

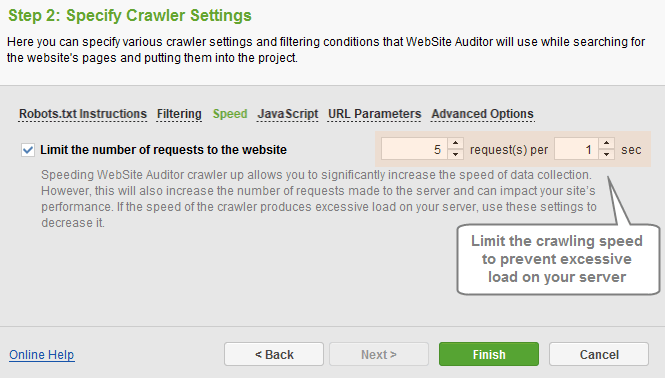

The Speed section lets you limit the number of requests sent to the website in order to decrease the load on the server. This prevents slower sites (or sites with strict security restrictions) from blocking the crawler.
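One common way to limit request rate is to enforce a minimum interval between requests. The sketch below shows that idea only; the `RequestThrottle` class and its parameters are hypothetical, and nothing here is taken from WebSite Auditor's internals.

```python
import time


class RequestThrottle:
    """Allow at most `max_requests` per `per_seconds`, spaced evenly."""

    def __init__(self, max_requests, per_seconds,
                 clock=time.monotonic, sleep=time.sleep):
        self.min_interval = per_seconds / max_requests
        self._clock = clock  # injectable so the class can be tested offline
        self._sleep = sleep
        self._last = None    # time of the previous request, if any

    def wait(self):
        """Block until enough time has passed since the previous request."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


if __name__ == "__main__":
    throttle = RequestThrottle(max_requests=5, per_seconds=1)
    for path in ["/", "/blog/", "/shop/"]:
        throttle.wait()  # call before each request
        print("fetching", path)
```

Calling `wait()` before every request keeps the crawler under the configured rate without any other changes to the crawl loop.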

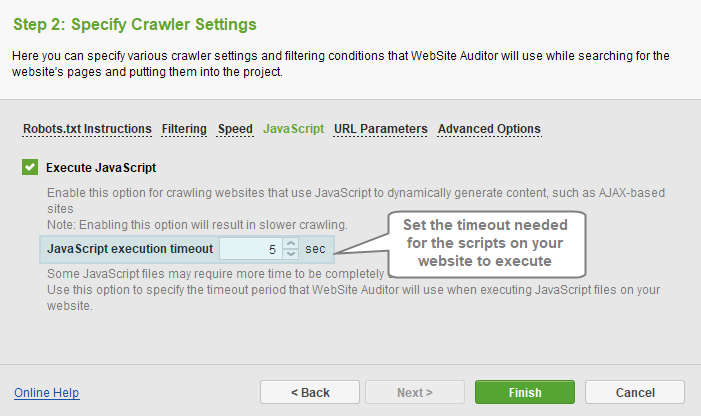

In the JavaScript section, you can control whether the app executes scripts while crawling your website's pages. Executing scripts allows the app to crawl websites built with Ajax, for instance, and to parse script-generated content in full.

- Enable JavaScript to execute scripts.

- Set the timeout long enough for your scripts to be executed.

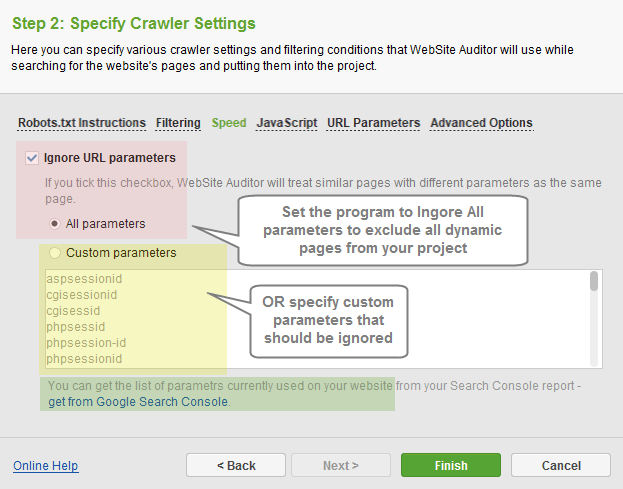

In the URL Parameters section, you can specify whether the program should collect dynamic pages.

- Disable the option to collect all dynamically generated pages with parameters in URLs into your project.

- Set the program to Ignore All parameters to treat all similar pages with different parameters as the same page (no dynamic pages will be collected).

- Specify Custom parameters that should be ignored (optionally, get the list of parameters used on your site from your Google Search Console account).
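The effect of ignoring parameters can be sketched as URL normalization: two URLs that differ only in ignored parameters collapse into one page. The `normalize_url` helper and the `sessionid` parameter are hypothetical examples built on Python's standard `urllib.parse` module, not a description of how the program stores pages.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def normalize_url(url, ignore_all=False, ignored_params=()):
    """Return the URL with all, or only the listed, query parameters removed."""
    parts = urlsplit(url)
    if ignore_all:
        query = ""
    else:
        ignored = set(ignored_params)
        kept = [(key, value)
                for key, value in parse_qsl(parts.query, keep_blank_values=True)
                if key not in ignored]
        query = urlencode(kept)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, parts.fragment))


if __name__ == "__main__":
    urls = [
        "https://example.com/shop?color=red&sessionid=abc",
        "https://example.com/shop?color=red&sessionid=xyz",
    ]
    # With "sessionid" ignored, both URLs are treated as the same page.
    print({normalize_url(u, ignored_params=["sessionid"]) for u in urls})
```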

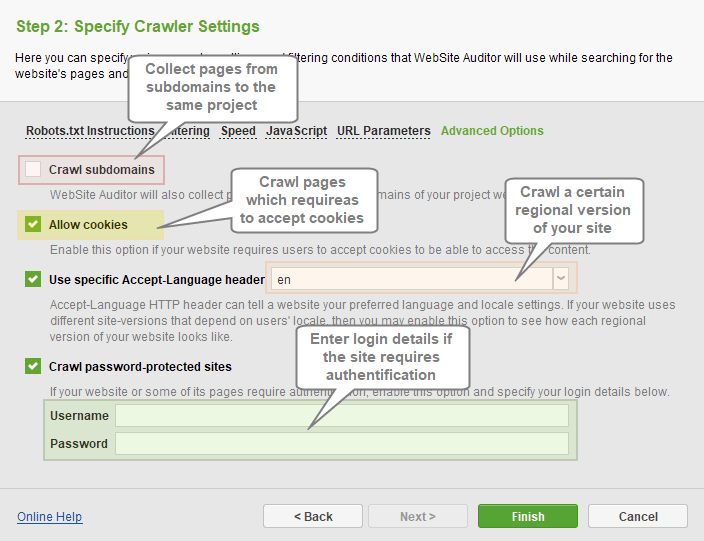

The Advanced Options section contains additional crawler settings, such as:

- Crawl Subdomains: WebSite Auditor will collect pages that belong to subdomains of your project domain.

- Allow cookies: this will allow you to crawl a website that requires users to accept cookies.

- Use specific Accept-Language header: allows you to crawl certain regional versions of your site.

- Crawl password-protected sites: allows you to enter login credentials if your site or some of its pages require authentication.
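To see why the Accept-Language setting matters, consider what a request carrying that header looks like. The sketch below only builds the request with Python's standard `urllib.request` and does not send it; the `build_request` helper and the example language value are assumptions for illustration.

```python
from urllib.request import Request


def build_request(url, accept_language=None):
    """Build (but do not send) a request with an optional Accept-Language header."""
    headers = {}
    if accept_language:
        # A site that serves regional versions may pick one based on this header.
        headers["Accept-Language"] = accept_language
    return Request(url, headers=headers)


if __name__ == "__main__":
    request = build_request("https://example.com/", accept_language="de-DE,de;q=0.9")
    print(request.header_items())
```

A site that redirects or localizes content by language would answer this request with its German version, which is the behavior the setting relies on.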

After configuring Crawler Settings, hit Finish for the program to start crawling your site. The settings can be accessed and changed any time later under ‘Preferences > Crawler Settings’ in each project.